1. I. Introduction

Human faces convey rich information such as identity, age, gender, intention, and reaction (expressive response). Humans also care a great deal about the attractiveness of their faces [8]. Consequently, many cosmetic surgeries aim to improve facial attractiveness by modifying facial appearance, color, and shape. However, cosmetic surgery is expensive and painful, so only wealthy people can afford it [7] [9] [10]. Extensive research is being carried out to improve facial aesthetics, but aesthetics is subjective. A solution is therefore needed that can generate or predict the appearance of a face before surgery [13] [14]. Computer vision makes it possible to improve facial aesthetics virtually, in the digital domain of images and video. We propose a real-time face feature reshaping technique for the eyes, eyebrows, jaw, chin, nose, and lips, so that users can see their manipulated appearance in real time.

2. II. Previous Work

Face detection is a key first phase in face-related computer vision problems. Paul Viola and Michael J. Jones [30] proposed a face detection framework. We categorize face morphing methods into two types: model-based and non-model-based. Model-based methods rely on face shape models such as the Active Appearance Model [24] [29], the Active Shape Model [24], the Constrained Local Model [21], and the 3D Morphable Model [2] [3] [8] [18], among others. The Basel Face Model [19] and the Surrey Face Model [19] are the most widely used 3D face models. In these methods, a face shape model is constructed from the face, morphed, and then fitted back onto the original face. Model construction and fitting are difficult tasks that require substantial computation. Even so, model-based methods give good results for real-time processing and are therefore widely used in image and video morphing. D. Kasat et al. [9] proposed a real-time morphing system that streams the input video from a Kinect sensor and morphs facial features such as the jaw, chin, nose, mouth, and eyes in real time. It uses an Active Appearance Model-based method together with the moving least squares method [35] for image deformation. However, its performance degraded under some illumination conditions, and it introduced delay. Yuan Lin et al. [3] proposed a face-swapping method that does not require the source and target images to share the same pose and appearance. Because it uses a 3D model-based approach, the face can be rendered at any pose angle. The 3D model is constructed from the image uploaded by the user, and a swapping step then replaces the character of the face. The results remain accurate when the target face is non-frontal, but the method cannot handle large illumination differences between the source and target images.
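The Viola-Jones framework [30] is known for evaluating Haar-like rectangle features in constant time by means of an integral image (summed-area table). The sketch below is a minimal pure-Python illustration of that core idea, not the detector used in this work; the function names are hypothetical.

```python
def integral_image(img):
    """Summed-area table with a zero row/column of padding:
    ii[y][x] is the sum of img[0..y-1][0..x-1]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above this row plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

For example, with `img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]`, `rect_sum(integral_image(img), 1, 1, 2, 2)` returns 28 (5 + 6 + 8 + 9) using four table lookups, whatever the size of the rectangle.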
A non-model-based method, such as face morphing using a critical point filter (CPF) [6], finds the critical values of the face and modifies them with the CPF, which can filter image properties such as depth, color, and intensity. The method was developed for two frontal face images. It uses only a few landmark points, to curtail the processing time that a larger number of landmarks and larger images would require.
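To make the critical point filter idea concrete, here is a minimal sketch of one level of a min/max critical point filter as it is commonly described: each 2 × 2 block of intensities is reduced to four values (a minimum, two saddle-like combinations, and a maximum), giving four half-resolution images. Whether [6] uses exactly this variant is an assumption, and the name `cpf_level` is hypothetical.

```python
def cpf_level(img):
    """One level of a min/max critical point filter (illustrative sketch).

    For each 2x2 block  a b
                        c d
    it keeps four values:
      s0 = min(min(a, b), min(c, d))   # local minimum
      s1 = max(min(a, b), min(c, d))   # saddle-like
      s2 = min(max(a, b), max(c, d))   # saddle-like
      s3 = max(max(a, b), max(c, d))   # local maximum
    Returns the four half-resolution images [s0, s1, s2, s3].
    """
    h, w = len(img) // 2, len(img[0]) // 2
    out = [[[0] * w for _ in range(h)] for _ in range(4)]
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            lo1, lo2 = min(a, b), min(c, d)
            hi1, hi2 = max(a, b), max(c, d)
            out[0][i][j] = min(lo1, lo2)
            out[1][i][j] = max(lo1, lo2)
            out[2][i][j] = min(hi1, hi2)
            out[3][i][j] = max(hi1, hi2)
    return out
```

Applying the function repeatedly to each of its outputs builds a multiresolution pyramid in which extrema survive downsampling instead of being averaged away.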

3. III. System Overview

The system is implemented using OpenCV with Python 3.7.0. The main objective of this work is to reshape individual facial features such as the eyebrows, eyes, nose, lips, chin, and jaw in real time with minimal delay. The image deformation algorithm is therefore applied only to the face area, within a window of 200 × 200 pixels; the window size is fixed to reduce the response delay. The system flowchart is illustrated in Figure 1. The system begins by capturing real-time video with the device camera. The input to the system is the RGB video stream captured by the front camera of a Sony laptop at a width of 900 pixels, and each RGB frame is converted into a grayscale image for the image deformation operation. We assume that only one person (referred to as the actor) is in front of the camera, and we take a set of face shape modification parameters from the user as input. We then detect the face using a face detection algorithm and identify the facial features to localize key feature points. Object detection using a Histogram of Oriented Gradients (HOG) with a linear SVM [36] is used to locate the face for facial landmark detection. First, OpenCV is used to detect the face in the input video frame, and the image is then preprocessed to a uniform width of 900 pixels.
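The preprocessing described above can be sketched with a few pure-Python helpers. The 900-pixel width and the 200 × 200 window come from the text, and the luma weights are the ITU-R BT.601 coefficients that OpenCV documents for its RGB-to-gray conversion. The helper names, and the choice to centre the window on the detected face and clamp it inside the frame, are assumptions for illustration.

```python
TARGET_WIDTH = 900  # frame width stated in the text
WINDOW = 200        # fixed deformation window (200 x 200) stated in the text


def resized_shape(width, height, target_width=TARGET_WIDTH):
    """Hypothetical helper: (new_width, new_height) keeping the aspect ratio."""
    scale = target_width / float(width)
    return target_width, int(round(height * scale))


def to_gray(r, g, b):
    """ITU-R BT.601 luma, the weighting OpenCV uses for RGB -> grayscale."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def face_window(cx, cy, frame_w, frame_h, size=WINDOW):
    """Hypothetical helper: corners (x0, y0, x1, y1) of a size x size window
    centred on the face centre (cx, cy), shifted to stay inside the frame.
    Assumes the frame is at least `size` pixels in each dimension."""
    half = size // 2
    x0 = min(max(cx - half, 0), frame_w - size)
    y0 = min(max(cy - half, 0), frame_h - size)
    return x0, y0, x0 + size, y0 + size
```

For a 1280 × 720 camera frame, `resized_shape(1280, 720)` gives `(900, 506)`. In an OpenCV pipeline the same steps would normally use `cv2.resize` and `cv2.cvtColor`; note that `cv2.VideoCapture` returns frames in BGR channel order, so the matching conversion code is `cv2.COLOR_BGR2GRAY`.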

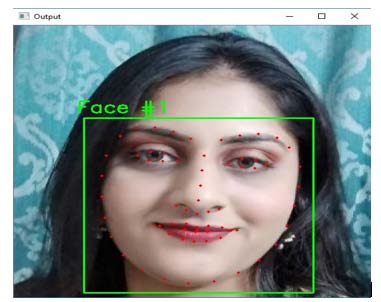

Fig. 2: Visualizing the 68 facial landmark coordinates

A pre-trained facial landmark detector estimates the location of 68 (x, y)-coordinates that map to facial structures on the face. The indexes of the 68 coordinates are visualized in Figure 2, where the red dots map to specific facial features, including the jaw, chin, lips, nose, eyes, and eyebrows. As a result, facial landmarks are detected in real time with high accuracy. The moving least squares (MLS) method [35] is then used to deform the image from the set of landmark points. MLS can produce deformations based on affine, similarity, and rigid transformations. These deformations look realistic and give the user the impression of manipulating the facial features in real time at high speed. The method generates a new frame by warping the pixels at the source points to the positions of the destination points. Image deformation is performed on every video frame, producing an output video in real time. The system's output is a live, morphed RGB video stream in which the requested face shape modifications have been applied.
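The per-point deformation can be sketched as follows, assuming the rigid MLS variant named in Fig. 3. Points are represented as Python complex numbers (x + 1j*y), which reduces the weighted 2-D rotation fit to a single complex sum. The function name, the weight exponent `alpha`, and the handling of degenerate cases are illustrative assumptions, not the authors' code.

```python
def mls_rigid(v, src, dst, alpha=1.0, eps=1e-12):
    """Map point v with a rigid moving-least-squares deformation (sketch).

    v   : complex point x + 1j*y to deform
    src : original landmark positions p_i (complex)
    dst : user-modified landmark positions q_i (complex)
    """
    weights = []
    for p, q in zip(src, dst):
        d2 = abs(p - v) ** 2
        if d2 < eps:
            return q  # v sits on a control point: it maps exactly onto q_i
        weights.append(1.0 / d2 ** alpha)  # w_i = 1 / |p_i - v|^(2*alpha)
    w_sum = sum(weights)
    # Weighted centroids p* and q*.
    p_star = sum(w * p for w, p in zip(weights, src)) / w_sum
    q_star = sum(w * q for w, q in zip(weights, dst)) / w_sum
    # The best-fitting similarity multiplies (v - p*) by a complex factor
    # proportional to sum_i w_i * conj(p_i - p*) * (q_i - q*).
    a = sum(w * (p - p_star).conjugate() * (q - q_star)
            for w, p, q in zip(weights, src, dst))
    if abs(a) < eps:
        return v - p_star + q_star  # degenerate configuration: translate only
    rotation = a / abs(a)  # drop the scale, keep the rotation -> rigid
    return rotation * (v - p_star) + q_star
```

For example, if every destination landmark is its source rotated by 90 degrees (`dst = [1j * p for p in src]`), `mls_rigid(3 + 4j, src, dst)` returns approximately `-4 + 3j`. To warp a whole frame, a common approximation is backward mapping: for each output pixel inside the 200 × 200 window, call the function with `src` and `dst` swapped to find where to sample the input frame.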

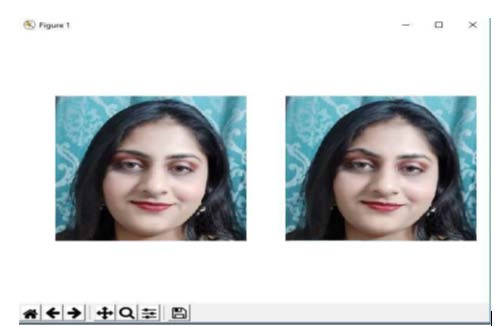

Fig. 3: Original image (left) and its deformation using the rigid MLS method (right). After deformation, the eyebrows are reshaped.

4. IV. Result Analysis

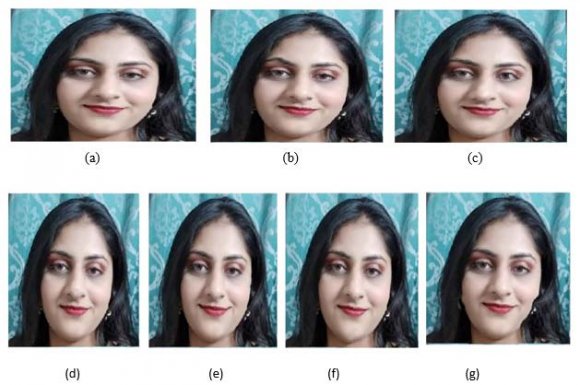

The results of the proposed work are presented in Figure 4, in which features such as the eyebrows, eyes, nose, lips, chin, and jaw are reshaped according to user requirements. The reshaping feature can be used by the general public in various applications, for example in cosmetic surgery, where users can visualize their face before the operation. Users can change various parameters in real time and view their face with the modified features. The system can also be used for face beautification applications, for fun with friends, for sharing on social media, and for capturing memorable moments. Notably, editing happens in real time without any external device. The system was evaluated on left-profile, frontal, and right-profile faces, and we also analyzed the time required to produce the output video. The results show that left- and right-profile faces require more time to produce the output video (mean per-frame response times of 26.8 ms and 26.3 ms, versus 24.2 ms for frontal faces). Table 1 shows the response time, in milliseconds, for each of ten frames of the output video. Figure 5 shows the time analysis graph for the various profile faces.
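The per-frame measurements can be summarized with a short script. The values are copied from Table 1 (face profile) and from the illumination table; treating each value as the latency of one frame, and converting the mean latency into an implied frame rate, are our assumptions.

```python
# Per-frame response times in milliseconds, frames 1-10, copied from the tables.
POSE_MS = {
    "left profile": [34, 33, 25, 26, 27, 25, 26, 24, 25, 23],
    "frontal": [26, 25, 24, 25, 24, 23, 24, 25, 22, 24],
    "right profile": [34, 33, 26, 24, 25, 24, 25, 24, 25, 23],
}
LIGHT_MS = {
    "dark": [48, 23, 25, 25, 29, 27, 30, 28, 24, 28],
    "normal": [26, 22, 23, 24, 29, 27, 25, 24, 27, 30],
    "light": [27, 21, 25, 24, 23, 23, 25, 29, 24, 24],
}


def summarize(times_ms):
    """Mean and worst-case latency, plus the frame rate implied by the mean."""
    avg = sum(times_ms) / len(times_ms)
    return {"mean_ms": avg, "max_ms": max(times_ms), "fps": 1000.0 / avg}


for table in (POSE_MS, LIGHT_MS):
    for name, times in table.items():
        s = summarize(times)
        print(f"{name:>13}: mean {s['mean_ms']:.1f} ms, "
              f"max {s['max_ms']} ms, ~{s['fps']:.0f} fps")
```

The script reports means of 26.8, 24.2, and 26.3 ms for the left, frontal, and right profiles (roughly 37, 41, and 38 frames per second) and 28.7, 25.7, and 24.5 ms for dark, normal, and light illumination. The dark-illumination mean is pulled up by its 48 ms first frame, so reporting the maximum alongside the mean makes such outliers visible.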

5. V. Comparison with Previous Work

In the work of D. Kasat et al. [9], similar effects on facial features (shown in Figure 8) are produced using a Kinect sensor for the input video. In our work (shown in Figure 4), the system lets users reshape individual facial parameters in real time without any external device and with less delay. The existing system requires configuring and connecting a Microsoft Kinect sensor to the device, so it is less portable than the proposed system. The quality of the output images relative to the input images is compared using the Structural Similarity Index Measure (SSIM).

The SSIM values of the existing and proposed systems are shown in Table 3 (a mean of 0.79 for the existing system versus about 0.75 for the proposed system over the four results). The existing system gives good-quality results because it uses a Microsoft Kinect sensor for the input video, which provides a 3D view and the depth of the captured image. Our system uses the device's front camera, which is capable of capturing good-quality video. The SSIM values are plotted in Figure 9. The comparison of the original and deformed faces, with their SSIM values, is shown in Figures 8 and 9.
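For reference, SSIM compares two images through their means, variances, and covariance. The sketch below evaluates the standard SSIM formula once over the whole image, with the usual constants K1 = 0.01 and K2 = 0.03 and a dynamic range of 255. This single-window form is a simplification of the windowed SSIM normally reported, and the function name is hypothetical.

```python
def ssim_global(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equal-length lists of gray levels.

    SSIM = ((2*mu_x*mu_y + C1) * (2*cov_xy + C2)) /
           ((mu_x^2 + mu_y^2 + C1) * (var_x + var_y + C2)),
    with C1 = (k1 * L)^2 and C2 = (k2 * L)^2, L = data_range.
    """
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

Identical images score 1.0, and the score is symmetric in its two arguments. Library implementations such as `skimage.metrics.structural_similarity` in scikit-image average SSIM over local windows, so their scores will generally differ from this global version.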

6. VI. Conclusion

We introduced a real-time face feature reshaping method for video sequences that allows specific facial features such as the eyebrows, eyes, nose, lips, jaw, and chin to be reshaped, which cannot be achieved by existing morphing techniques. We propose, for the first time, a real-time video morphing system that uses no external device. The system is portable and can be used in entertainment applications, games, film production, medicine, and beautification. With full flexibility and good accuracy, the system allows facial features to be reshaped in real time. The comparison with the existing method using the SSIM index quantifies the quality of the generated output, and the analysis of the time required to produce the output under various face profiles and illumination conditions supports the proposed system. In future work, we will build an expert system for medical applications to visualize modified faces before plastic surgery.

![Fig. 1: System Diagram of Proposed System](https://computerresearch.org/index.php/computer/article/download/102258/version/102258/4-Real-Time-Face-Feature-Reshaping_html/40484/image-2.png)

Each video frame is therefore resized to the same size. The input resolution allows more detail to be detected in each video frame. Our system uses 68 primary and secondary landmark points [33], as shown in Figure 2. The landmark points are used to find the exact position of each facial feature and to reshape it. The system extracts a set of (x, y) coordinates on the input face, and these landmark points are fed into the image deformation method, which reshapes the face according to the parameters provided by the user. The facial features are the lips, right eyebrow, left eyebrow, right eye, left eye, nose, jaw, and chin. Kazemi and Sullivan [33] proposed "One Millisecond Face Alignment with an Ensemble of Regression Trees", a face landmark detection method, which we use for accurate landmark detection on the user's face. We used a pre-trained facial landmark detector to estimate the location of 68 (x, y)-coordinates that map to facial structures on the face.
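The feature groups listed above map onto fixed index ranges of a 68-point landmark layout. The sketch assumes the widely used iBUG 300-W 68-point indexing (0-based, with "right" meaning the subject's right), which is the layout of common pre-trained 68-point predictors. Treating jaw points 6 to 10 as the "chin", and producing destination points by scaling one feature about its centroid, are hypothetical choices for illustration; the resulting lists could serve as the source and destination points of an MLS deformation.

```python
# 0-based index ranges of the iBUG 300-W 68-point layout (assumed).
FEATURE_RANGES = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}
# Hypothetical: the lower jaw contour around the chin tip (point 8).
FEATURE_RANGES["chin"] = range(6, 11)


def reshape_feature(landmarks, feature, scale):
    """Return destination points with one feature scaled about its centroid.

    landmarks: list of 68 (x, y) tuples; scale > 1 enlarges, < 1 shrinks.
    All other points are left unchanged so they anchor the deformation.
    """
    idx = FEATURE_RANGES[feature]
    cx = sum(landmarks[i][0] for i in idx) / len(idx)
    cy = sum(landmarks[i][1] for i in idx) / len(idx)
    dst = list(landmarks)
    for i in idx:
        x, y = landmarks[i]
        dst[i] = (cx + scale * (x - cx), cy + scale * (y - cy))
    return dst
```

The seven non-overlapping groups cover all 68 points (17 + 5 + 5 + 9 + 6 + 6 + 20), while the hypothetical "chin" group reuses part of the jaw contour.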

Table 1: Response time (ms) per video frame for left-profile, front-profile, and right-profile faces

| Video Frame | Response Time in Left Profile Face (ms) | Response Time in Front Profile Face (ms) | Response Time in Right Profile Face (ms) |
|---|---|---|---|
| 1 | 34 | 26 | 34 |
| 2 | 33 | 25 | 33 |
| 3 | 25 | 24 | 26 |
| 4 | 26 | 25 | 24 |
| 5 | 27 | 24 | 25 |
| 6 | 25 | 23 | 24 |
| 7 | 26 | 24 | 25 |
| 8 | 24 | 25 | 24 |
| 9 | 25 | 22 | 25 |
| 10 | 23 | 24 | 23 |

Table 2: Response time (ms) per video frame under dark, normal, and light illumination

| Video Frame | Response Time in Dark Illumination (ms) | Response Time in Normal Illumination (ms) | Response Time in Light Illumination (ms) |
|---|---|---|---|
| 1 | 48 | 26 | 27 |
| 2 | 23 | 22 | 21 |
| 3 | 25 | 23 | 25 |
| 4 | 25 | 24 | 24 |
| 5 | 29 | 29 | 23 |
| 6 | 27 | 27 | 23 |
| 7 | 30 | 25 | 25 |
| 8 | 28 | 24 | 29 |
| 9 | 24 | 27 | 24 |
| 10 | 28 | 30 | 24 |

Table 3: SSIM values of the existing and proposed systems

| SSIM Value | Existing System | Proposed System |
|---|---|---|
| Result 1 | 0.73 | 0.73 |
| Result 2 | 0.89 | 0.77 |
| Result 3 | 0.80 | 0.77 |
| Result 4 | 0.74 | 0.74 |