1. Introduction

he field of intrusion detection has received increasing attention in recent years. One reason is the explosive growth of the Internet and the large number of networked systems that exist in all types of organizations. The increased number of networked machines has led to a rise in unauthorized activity, not only from external attackers but also from internal sources such as disgruntled employees and people abusing their privileges for personal gain [26].Since intrusions take advantage of vulnerabilities in computer systems or use socially engineered penetration techniques,intrusion detection (ID) is often used as another wall of protection. Intrusion detection includes identifying a set of malicious actions that compromise the integrity, confidentiality, and availability of information resources.

Enough data exists or could be collected to allow network administrators to detect any violations. Unfortunately, the data is so volumes, and the analysis process so time consuming that the administrators don't have the resources to go through it all and find the relevant knowledge, save for the most exceptional situations [30].Given the nature of this problem, the possible solution is data mining approach [3], [30].

Data mining approach for intrusion detection techniques generally fall into one of two categories; misuse detection and anomaly detection. In misuse detection, each instance in a data set is labeled as 'normal' or 'intrusion' and a learning algorithm is trained over the labeled data. These techniques are able to automatically retrain intrusion detection models on different input data that include new types of attacks, as long as they have been labeled appropriately. Unlike manual intrusion detection systems, models of misuse are created automatically, and can be more sophisticated and precise than manually created signatures. A key advantage of misuse detection techniques is their high degree of accuracy in detecting known attacks and their variations. Their obvious drawback is the inability to detect attacks whose instances have not yet been observed.

Anomaly detection, on the other hand, builds models of normal behavior, and automatically detects any deviation from it, flagging the latter as suspect. Anomaly detection techniques thus identify new types of intrusions as deviations from normal usage [5]. While an extremely powerful and novel tool, a potential draw-back of these techniques is the rate of false alarms. This can happen primarily because previously unseen (yet legitimate) system behaviors may also be recognized as anomalies, and hence flagged as potential intrusions. Hybrid IDS combine bothmethods and it is better one [22].

The problem with the current researches is that they try to minimize the error rate (make the classification decision to minimize the probability of error) by totally ignoring the cost that could be incurred. However, for many problem domains, the requirement is not merely to predict the most probable class label, since different types of errors carry different costs [10].

For example of such problem in the case of computer network security include authentication, where the cost of allowing unauthorized access can be much greater than that of wrongly denying access to authorized individuals, and intrusion detection, where raising false alarms has a substantially lower cost than allowing an undetected intrusion. In such cases, it is preferable to make the classification decision that has minimum expected cost, rather than that with the lowest error probability [23].

Another very important case is, if class imbalanced datasets occurs but this happens in many real-world applications where the class distributions of data are highly imbalanced [23]. Again, it is assumed that the minority or rare class is the positive class, and the majority class is the negative class. Often the minority class is very small, such as 1% of the dataset. If most traditional (cost insensitive) classifiers are applied on the dataset, they will likely to predict everything as negative (the majority class) [33].

The intrusion data used for this research, KDD data set, which is publicly available and most widely used data set, has class distributions of training and test datasets with different distribution and each attack types has different costs. Statistically, the attacks of U2R and R2L are of the rarest, which makes them very hard to predict. On the other hand, they are the most dangerous types. Once an attacker gains the super user right or successfully remote login, disasters of the whole system are nothing but unavoidable [19].

Comparably, attacks of Probe are not that much dangerous. Although attacks of DOS (denial of service) are massive in the whole original dataset, they impose less danger. This is because the nature of denial of service attacks lies in that they are trying to initiate as many as possible connections to consume the network traffics and server CPU time [19].

Because of the above mentioned reasons cost sensitive learning which considers cost in decision making with acceptable accuracy is better solution for computer network intrusion detection. We used cost sensitive learning algorithms, cost sensitive classification tree CS-CRT and cost sensitive decision tree CS-MC4 algorithms. These algorithms use misclassification cost matrix to minimize the expected cost and for the detection of best prediction. Yet despite its importance, the topic is seldom addressed and researched.

2. II. Intrusion Detection, Cost Sensitive

Machine Learning and Performance Measure An intrusion detection system attempts to detect intrusions. In this paper, we focus on networkbased systems, i.e., network intrusion detection systems (NIDS) whose primary source of data is network traffic. In contrast, there is host intrusion detection systems (HIDS) which rely on information gathered on individual hosts. Hybrid systems are both network-based and host-based [5], [22].

3. a) Classification of intrusion detection based on different detection method i. Misuse Detection

It is also named signature-based detection, which can transform the information of attack symptom or policy disobeying into state transition-based signature or rule, and such information is stored in signature database. To judge whether or not it is attack, pretreated case data should be first compared with the signature of signature database, and those conforming to attack signature data can be judged as attack [22]. Its advantage is high detection rate and low false alarm rate for known attacks; however, its detection capacity is low for unknown detection methods, and attack database should be renewed on a regular basis.

ii. Anomaly Detection It may establish a profiles for normal behavior of users, which comes from statistics data of users in the former period; when detection is performed, the profiles is compared with actual users' data, if the offset is below threshold value, user's behavior can be considered normal, and it has no intention of attacks; if the offset is above threshold value, user's behavior can be considered abnormal [22]. Anomaly detection is based on an assumption that intruder's behavior is different from normal users' behavior. Detection rate of the method is high, and it is more likely to detect unknown attacks, but misjudgment (false positive) rate is also high.

iii. Hybrid It is also possible to include both normal and attack data in the model. Such systems are referred to be hybrid detectors. 1. Collector: Provides an interface for accessing data that is used by the detection process. For a NIDS, the primary kind of data collector is a network tap. A tap provides access to all raw network packets which cross a particular position of a network. The cost model of IDS devises the total expected cost of the IDS. The cost model depends on the detection performance of the IDS. Misclassification costs false positive (FP, the cost of misclassifying a negative instance into positive) and false negative (FN, the cost of misclassifying a positive instance into negative), and the cost of correct classification is zero. They simply assign FP as the weight to each negative instance, and assign FN as the weight to each positive instance. That is, the weight ratio of a positive instance to a negative instance is proportional to FN/FP [9], [19].

The cost of misclassifying an instance of class i as class j is C(i, j ) and is assumed to be equal to 1 unless specified otherwise(i, i ) = 0 for all i . Any classification tree can have a total cost computed for its terminal node assignments by summing costs over all misclassifications. The issue in cost sensitive learning is to induce a tree that takes the costs into account during its growing and pruning phases [19]. These misclassification cost values can be given by domain experts, or learned via other approaches. In cost sensitive learning, it is usually assume that such a cost matrix is given and known. For multiple classes, the cost matrix can be easily extended by adding more rows and more columns.

When optimizing sampling levels to improve overall cost, a logical evaluation criterion is the cost itself. Cost is calculated as follows by taking the assumption C (+|+) = C (-|-) =0.Cost = FN rate x C (-|+) + FP rate x C (+|-). Therefore, once classification occurs in the cross-validation phase, the wrapper or filter calculates the validation cost and uses this information to select optimal sampling levels. This approach is Actual negative Actual positive

4. CM (I, J)*C (I, J)

Where CM corresponds to confusion matrix, C corresponds to the cost matrix, and N represents the number of patterns tested [10].

5. IV. Data Collection and Preparation

NSL-KDD data set which is the new version of KDD Cup 99 dataset and the only publicly available [21] and the widely used data set for intrusion detection have been used for the experimental purpose. The process of data cleaning and preparation is highly dependent on the specific machine learning algorithm and software chosen and algorithms used for the machine learning task. The researcher attempted to prepare the data according to the requirements of the Tanagra which is powerful, user friendly and freely available for noncommercial purpose machine learning software [11] and according to the requirements of CS-MC4 and CS-CRT algorithms by consulting different literatures.

6. V. Training and Testing Models

The Intrusion detection models were developed using cost sensitive classification tree CS-CRT and cost sensitive decision tree CS-MC4 algorithms on full training NSL-KDD dataset using a powerful machine learning and data mining Tanagra tool and then Full testing dataset of NSL-KDD passed through the developed models to detect the intrusions and find the detection error rates, precision, false positives rate, average misclassification cost and accuracy of the detection models but for comparison of models we used mainly average misclassification cost, false positives rate and accuracy of detection.

7. VI. Experimentation

We used data mining software tool known as Tanagra version 1.4.34 available freely at [11]. The software is a GUI based software and easy to use. Tanagra is capable of classifying large volumes of data within a second depending on the speed and specification of computer processor. All experiments were performed using an Intel Core 2 Duo Processor 2.16 GHz processor with 4 GB of RAM and implemented on a Vista Windows operating system.

We have used NSL-KDD intrusion dataset which is available at [21] and it is a dataset suggested to solve some of the inherent problems of the original KDD Cup 99 dataset [13,20]. The NSL-KDD dataset has the following advantages over the original KDD Cup 99 dataset. We have used the cost matrix defined for the KDD Cup 99 Dataset [9] which is shown in Table 4.

Category Normal Probe DOS U2R R2L Normal 0 1 2 2 2 Probe 1 0 2 2 2 DOS 2 1 0 2 2 U2R 3 2 2 0 2 R2L 4 2 2 2 08. VII. Experimentation and Result

The experimentations have two major parts; the first one is experimentation on CS-MC4 and CS-CRT using all the 41 attributes and the second is experimentation on CS-MC4 and CS-CRT using information gain (IG) feature (attribute) selection method. Comparative discussions of each approaches used with the KDD 99 winner results are given.

Table 5 summarizes comparison results of detection accuracy (which refers to the proportion that a type of data is correctly classified) of normal and 4 different attacks (i.e. Probe, Dos, U2R, R2L) based on CS-MC4 and CS-CRT classifiers using 24, 30, 37, and all the attributes in comparison with KDD winner results. It is evident from this Table that:

9. Cscrt

10. Cs-mc4

11. Cscrt

12. Cs-mc4

13. Cscrt

14. Cs-mc4

15. Cscrt

16. Cs-mc4

17. Cscrt

1. For Normal: CS-MC4 classifiers outperform their CS-CRT counterparts in all cases but when only 30 attributes are used, the difference between the accuracy of CS-MC4 and CS-CRT became minimal i.e. 0.36%. For this type of class, in all the cases the accuracy of the KDD winner result is better than both CS-MC4 and CS-CRT classifiers.

2. For Probe attack: the accuracy of CS-MC4 is better than that of CS-CRT when only 24 attributes are used, but in other circumstances CS-CRT outperforms CS-MC4. For this type of attack, in all the cases the accuracy of both CS-MC4 and CS-CRT is better than the KDD winner result.

3. For Dos attack: CS-MC4 classifiers outperform their CS-CRT counterparts in all cases but when only 30 attributes are used, the difference between the accuracy of CS-MC4 and CS-CRT became minimal, i.e. 0.17%. For this type of attack, in all the cases the accuracy of both CS-MC4 and CS-CRT is better than the KDD winner result. 4. For U2R attack: the accuracy of CS-MC4 classifiers is better than their CS-CRT counterparts in all cases.

For this type of attack, in all the cases the accuracy of both CS-MC4 and CS-CRT is better than the KDD winner result except when only 24 attributes are used for CS-CRT classifier. 5. For R2L attack: accuracy of CS-MC4 classifiers is better than their CS-CRT counterparts in all cases.

For this type of attack, in all the cases the accuracy of both CS-MC4 and CS-CRT are by far better than the KDD Cup 99 winner result.

In general, it is evident from the above table that in terms of accuracy, CS-MC4 outperformed the CS-CRT. As it can be seen from the result, the feature selection method used (i.e. information gain value) did not increase the accuracy of CS-MC4 classifier. Even though CS-MC4 classifier achieved the same result using all the attributes (41 attributes) and 37 attributes (at information gain value greater than 0), the reduction of the attributes from 41 to 37 could increase the speed of the classifier and reduce the space required. CS-CRT achieved a better result when only 30 attributes (information gain value greater than and equal to 0.011) are used relative to the other cases.

So, feature selection using information again value achieved better result for CS-CRT classifier from 41 attributes to 30 attributes but for CS-MC4 classifier from 41 attributes to 37 attributes. Results which are achieved in this work are compared with the results obtained by KDD winner results as shown in table 4. As it can be seen, the accuracy of KDD Winner is only better in normal, but it is far worse than CS-MC4 and CS-CRT in U2R and R2L attacks; this might be because of the data used for this research is NSL-KDD intrusion dataset (which is new version of the original KDD cup data and it is better than the original dataset in that it Table 6 summarizes the efficiency of information gain value for feature selection and the performance comparison of CS-MC4, CS-CRT classifier and KDD winner result based on overall accuracy, false positives rate and average misclassification cost using 24, 30, 37 and all the attributes for 5 attack classes on NSL-KDD dataset. And in all the cases, the Average misclassification cost of both CS-MC4 and CS-CRT is better than the KDD winner result. 4. Attribute reduction using information again value achieved better result for CS-CRT classifier from 41 attributes to 30 attributes, from 94.2% to 98.4% accuracy, from 7.1% to 1.7% false positive rate, and average misclassification cost from 0.0895 to 0.0335; but for CS-MC4 classifier it only reduce the attribute from 41 attributes to 37 attributes.

18. VIII. Conclusions

This paper focuses on using cost sensitive learning techniques to existing data mining algorithms to deal with cost of different types of attacks in intrusion detection while at the same time reducing the false positives rate. The cost issue is widely ignored in the intrusion detection research. As a result, most of the research projects tried to minimize the false positives rate which may not reflect real-world scenario of dealing with ever increasing different types of attacks with different costs; and an important consideration is the fact that raising false alarms carries a significantly lower cost than not detecting attacks.

For comparison results of CS-MC4, CS-CRT and KDD 99 winner result, it was found that CS-MC4 is superior to CS-CRT in terms of accuracy, false positives rate and average misclassification costs. CS-CRT is superior to KDD winner result in accuracy and average misclassification costs but in false positives rate KDD winner result is better than both CS-MC4 and CS-CRT classifiers.

This paper proposed learning approach for network intrusion detection that performs feature selection method using information gain by selecting important subset of attributes. The performance of the proposed approach on the NSL-KDD intrusion detection dataset achieved a better accuracy from 94.2% to 98.4% for the CS-CRT classifier. It also reduced the false positives for the CS-CRT algorithm from 7.1% to 1.7%, and the average misclassification costs from 0.0895 to 0.0335; but for the CS-CM4 algorithm, it only reduced the attribute from 41 to 37 attributes.Even though feature selection method could not increase accuracy or reduce false positive rate and average misclassification cost for CS-MC4, it could increase the speed of the classifier and reduce the computation space required. The experimental results have manifested that feature (attribute) selection improves the performance of IDS.

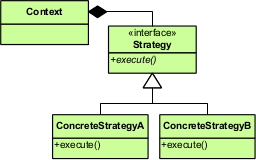

![Figure 2 : NIDS architecture III. Cost Sensitive Machine Learning Costs are central to statistical decision theory but cost sensitive learning received only modest attention before [19]. Cost sensitive learning is a type of learning in data mining that takes the misclassification costs (and possibly other types of cost) into consideration. The goal of this type of learning is to minimize the total cost. The key difference between cost sensitive learning and cost insensitive learning is that cost sensitive learning treats the different misclassifications differently. Cost insensitive learning does not take the misclassification costs into consideration. The goal of this type of learning is to practice a high accuracy of classifying examples into a set of known classes. Cost sensitive learning is an extensively used practice in data mining, which assigns different levels of misclassification penalty to each class. Cost sensitive technique has been incorporated into classification algorithms by taking into account the cost information and trying to optimize the overall cost during the learning process. a) Cost Models](https://computerresearch.org/index.php/computer/article/download/139/version/100876/1-A-Cost-Sensitive-Machine-Learning_html/15154/image-4.png)

| b) Performance Measures | |

| detection rate, low false alarm rate and lower average | |

| misclassification cost. The followings are commonly | |

| used performance measures | |

| i. | Error Rate |

| The error rate, which is only an estimate of the | |

| true error rate and is expressed to be a good estimate, if | |

| the number of test data is large and representative of | |

| the population and is defined as | |

| [68]: | |

| 4. The number of records in the training and testing | |||

| sets are reasonable, which makes it affordable to | |||

| run the experiments on the complete set without the | |||

| need to randomly select a small portion. | |||

| 5. Statistical observations, one of the most important | |||

| deficiencies in the KDD dataset is the huge number | |||

| of redundant records, which causes the learning | |||

| algorithms to be biased towards the frequent | |||

| records and thus prevent them from learning | |||

| unfrequent records which are usually more harmful | |||

| to networks such as U2R and R2L attacks. | |||

| Table2 and Table3 shows the statistics of the | |||

| redundant records in the KDD Cup 99 training and | |||

| testing datasets. | |||

| Original | Records | Distinct Records Reduction Rate | |

| Attacks | 3,925,650 | 262,178 | 93.32% |

| Normal | 972,781 | 812,814 | 16.44% |

| Total | 4,898,431 | 1,074,992 | 78.05% |

| Original | Records Distinct Records | Reduction Rate | |

| Attacks | 250,436 | 29,378 | 88.26% |

| Normal | 60,591 | 47,911 | 20.92% |

| Total | 311,027 | 77,289 | 75.15% |

| Normal | DOS | R2L | Probe | U2R |

| Cs- | ||||

| mc4 |

| Overall accuracy (%) | False positives rate (%) | Average Misclassification Cost | |||

| CS-MC4 | CS-CRT | CS-MC4 | CS-CRT | CS-MC4 | CS-CRT |

| All attributes 98.9 | 94.2 | 1.3 | 7.1 | 0.0199 | 0.0895 |

| 24 attributes 98.8 | 94.1 | 1.4 | 6.4 | 0.0232 | 0.0982 |

| 30 attributes 98.8 | 98.4 | 1.4 | 1.7 | 0.0201 | 0.0335 |

| 37 attributes 98.9 | 94.1 | 1.3 | 7.2 | 0.0199 | 0.0887 |

| KDD winner 92.7 | 0.55 | 0.2331 | |||

| It is evident from above table that: | |||||

| 1. Overall accuracy of CS-MC4 classifiers is better | |||||

| than their CS-CRT counterparts in all cases. And in | |||||

| all the cases, the overall accuracy of both CS-MC4 | |||||

| and CS-CRT is better than the KDD winner result. | |||||

| 2. False positives rate of CS-MC4 classifiers are better | |||||

| than their CS-CRT counterparts in all cases. And in | |||||

| all the cases, the false positive rate of the KDD | |||||

| winner result is better than both CS-MC4 and CS- | |||||

| CRT classifiers. | |||||

| 3. Average misclassification cost of CS-MC4 classifiers | |||||

| is better than their CS-CRT counterparts in all cases. | |||||