1. Introduction

According to the World Health Organization (WHO), the number of people with diabetes has quadrupled since 1980. Prevalence is increasing worldwide, particularly in low- and middle-income countries. The medical costs and lost work and wages for people diagnosed with diabetes are estimated at $327 billion yearly, and their medical costs are twice as high as for those who do not have diabetes (CDC, 2018). About 422 million people worldwide have the disease. It can lead to serious complications in any part of the body, such as kidney disease, blindness, nerve damage, and heart disease (Temurtas et al., 2009), and it increases the risk of dying prematurely: diabetes is the seventh leading cause of death worldwide.

Diagnosing diabetes requires the physician to analyze many factors, which makes the job difficult. Thus, to save time and cost and to reduce the risk posed by an inexperienced physician, classification models may be built to help predict and diagnose diabetes based on previous records (Polat et al., 2008). The use of machine learning in medicine has increased substantially. With the exponential growth of big data, analyzing such data manually is impractical; therefore, automated techniques such as machine learning are used. Machine learning is defined as the ability of a system to learn on its own by extracting patterns from large amounts of raw data (Goodfellow et al., 2016).

The General Regression Neural Network Oracle (GRNN Oracle), developed by Masters et al. in 1998, combines the predictions of individually trained classifiers and outputs one superior prediction. It determines the error rate of each classifier from a set of observations and assigns weights that favor classifiers with lower error rates. The final prediction for an unknown observation is calculated by summing each classifier's prediction for that observation multiplied by the classifier's weight, so classifiers with lower error rates have greater influence on the final prediction.

Because of the strong capabilities of the oracle, it has been enhanced to consist of two GRNN Oracles, one within the other. In this approach, first proposed by Bani-Hani (2017), the first oracle is created through its own combination of algorithms and then acts as a classifier, since it has its own predictions and error contribution for a set of unknown observations. It is then combined with other classifiers to create a new, outer oracle named the Recursive General Regression Neural Network Oracle (R-GRNN Oracle). In this study, the R-GRNN Oracle is applied to the Pima Indians Diabetes dataset, with a Genetic Algorithm (GA) used for feature selection and hyperparameter optimization. The proposed classifier is compared with seven other classifiers for the prediction and diagnosis of diabetes: Support Vector Machine (SVM), Multilayer Perceptron (MLP), Random Forest (RF), Probabilistic Neural Network (PNN), Gaussian Naïve Bayes (GNB), K-Nearest Neighbor (KNN), and the GRNN Oracle. The R-GRNN Oracle achieved the highest accuracy and AUC (area under the Receiver Operating Characteristic (ROC) curve) among the classifiers used.

The remainder of this paper is organized as follows: Section 2 presents the related work, Section 3 explains the methodology adopted in this study, Section 4 shows the experimental analysis and results, Section 5 presents the discussion, and Section 6 presents the conclusion and future work.

2. II.

3. Related Work

Prediction models are widely implemented in clinical and medical fields to support diagnostic decision-making (Zheng et al., 2015).

Many other studies have been carried out on the same dataset. However, those that reported training accuracies rather than testing or validation accuracies were excluded from the literature review, most importantly because of overfitting: a model fitted too closely to the training set yields inflated accuracies and does not generalize. Studies that obtained high accuracies but did not state whether they came from a training set or from a testing or validation set were also excluded, since their results are questionable. It is worth noting that this study applied 4-fold cross validation to train and test each classifier, and that each classifier was then evaluated on a validation subset that took part in neither the training nor the testing steps.

4. III.

5. Methodology

Six individual classifiers were used in this research: SVM, MLP, RF, PNN, GNB, and KNN. Some were used to create the GRNN Oracle, and some were combined with the first oracle to create the R-GRNN Oracle. The study was implemented in Python 3.6 on an Intel® Core™ i7-8750H CPU @ 2.20 GHz with 32.0 GB of RAM.

a) Individual Classifiers

Support Vector Machine: SVM is a statistical learning method proposed by Vapnik (1995). It is a widely used supervised machine learning algorithm for both classification and regression. SVM works by finding the hyperplane that maximizes the margin between the classes in the feature space, as seen in Figure 1. Support vectors are the observations that help dictate the hyperplane. New samples are classified according to the side of the boundary on which they are located.

Multilayer Perceptron: MLP is a feed-forward artificial neural network (NN) that is a modification of the standard linear perceptron. It does not require a linear relationship between the independent variables and the dependent variable, as it can solve problems that are not linearly separable through the use of activation functions located in each node. An MLP consists of an input layer, one or more hidden layers, and an output layer. It is a supervised machine learning algorithm that uses back propagation to optimize the weights of each edge connecting two nodes. It is the most frequently used NN (Hossain et al., 2017) and is widely used for classification, regression, recognition, prediction, and approximation tasks. Figure 2 illustrates an example of an MLP with one hidden layer with five hidden nodes.

Gaussian Naïve Bayes: GNB is a supervised learning algorithm that is widely used for classification problems because of its simplicity and accurate results (Farid et al., 2014). It uses Bayes' theorem as its framework (Griffis et al., 2016) and makes strong independence assumptions between the independent variables. One important advantage of GNB is that it can estimate the parameters necessary for classification from a small training set.

K-Nearest Neighbor: KNN is a non-parametric, lazy learning method for classification and regression tasks (Zhang, 2016). $k$ is a user-set parameter that represents the number of known observations closest to the unknown observation in the feature space. For classification tasks, the class of the new observation is the majority class among its $k$ nearest neighbors; $k$ is typically an odd number. For regression tasks, the value of the new observation is taken as the average of its $k$ neighbors.
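The majority-vote rule for KNN classification can be illustrated with a minimal sketch. The function name `knn_predict`, the Euclidean distance, and the toy data are assumptions made for illustration; the paper does not state which distance metric or implementation was used.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest known observations."""
    # Pair every known observation's distance to the query with its class label.
    distances = [(euclidean(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest observations and count their labels.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data (illustrative only): two features, binary outcome.
train_X = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9), (0.7, 0.7)]
train_y = [0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, (0.75, 0.8), k=3))
```

An odd `k` avoids tied votes in a two-class problem such as this one.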

6. b) Optimization Algorithms

Genetic Algorithm: GA is a population-based metaheuristic developed by John Holland in the 1970s (Holland, 1992).

7. c) GRNN Oracle

The GRNN Oracle combines the predictive powers of several machine learning classifiers that were trained independently to form one superior prediction (Li, 2014). It determines the error rate for each classifier involved in the oracle in order to assign weights to favor classifiers with lower error rates. The final prediction for an unknown observation is calculated by summing each classifier's prediction for that unknown observation multiplied by the classifier's weight.

The steps involved in predicting a class (output) for a single observation are as follows.

First, each classifier $j$ is trained on a training subset of the data and tested on another subset to obtain predictions for the observations.

Second, each prediction obtained in the previous step (the probability of belonging to each class) for each observation is compared to its actual class, and the Mean Squared Error (MSE) is calculated through Formula 1:

$$\mathrm{MSE}_{i,j} = \frac{1}{\mathit{num\_classes}} \sum_{c=1}^{\mathit{num\_classes}} \left(p_i^c - \hat{p}_{i,j}^c\right)^2 \qquad (1)$$

where $\mathrm{MSE}_{i,j}$ is the mean squared error of a known observation $i$ from classifier $j$, $\mathit{num\_classes}$ is the total number of classes, $p_i^c$ is the actual probability of the known observation $i$ being class $c$, and $\hat{p}_{i,j}^c$ is the predicted probability of being class $c$ from classifier $j$.

Third, for a given unknown observation in the validation set (an observation that needs to be predicted), the distance between the observation and all the known samples in the testing set is calculated, and each known observation receives a particular weight for the unknown observation. The distance is calculated using Formula 2 and the weight using Formula 3:

$$D(\vec{x}, \vec{x}_i) = \frac{1}{F} \sum_{f=1}^{F} \left(\frac{x_f - x_{if}}{\sigma_f}\right)^2 \qquad (2)$$

$$\mathit{weight}_i = e^{-D(\vec{x}, \vec{x}_i)} \qquad (3)$$

where $\vec{x}$ represents the vector of features belonging to the unknown observation, [feature 1, feature 2, ..., feature $F$], $\vec{x}_i$ is the feature vector of the known observation $i$, $x_f$ is the $f$-th feature of the unknown observation, $x_{if}$ is the $f$-th feature of the known observation, $\sigma_f$ is an adjustable sigma parameter for the $f$-th feature, and $F$ is the total number of features. $w_j$ (Formula 5) is the weight (trust) of classifier $j$ on the prediction of the unknown observation.

Fourth, for the unknown observation and for each classifier $j$, the predicted squared error is obtained from the MSE and the weight of each known observation (Formula 4):

$$\mathrm{MSE}_j(\vec{x}) = \frac{\sum_{i=1}^{N} \mathrm{MSE}_{i,j} \cdot \mathit{weight}_i}{\sum_{i=1}^{N} \mathit{weight}_i} \qquad (4)$$

where $N$ is the number of known observations.

Fifth, each classifier $j$ has an amount of trust for the final prediction of the unknown observation; the higher the weight, the more influence the classifier has on the final prediction (Formula 5):

$$w_j = \frac{1/\mathrm{MSE}_j(\vec{x})}{\sum_{l=1}^{J} 1/\mathrm{MSE}_l(\vec{x})} \qquad (5)$$

$$\sum_{j=1}^{J} w_j = 1 \qquad (6)$$

where $J$ is the total number of classifiers and $j$ indicates classifier $j$. The sum of $w_j$ over all classifiers equals one (Formula 6).

Lastly, each classifier's trust/weight, which reflects the amount of error it contributes, is multiplied by its prediction for the unknown observation, and the products are summed to form the final prediction for that observation (Formula 7):

$$\hat{\mathbf{y}} = \sum_{j=1}^{J} w_j \, \mathbf{y}_j \qquad (7)$$

where $\hat{\mathbf{y}}$ is the prediction for the unknown observation output by the GRNN Oracle, represented as a class membership vector, and $\mathbf{y}_j$ is the predicted class membership vector for the unknown observation given by classifier $j$.
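The oracle's steps can be traced in a short sketch. This is an illustrative reading of Formulas 1 to 7 and not the authors' implementation: the function name `grnn_oracle_predict`, the argument layout, the small `eps` guard against division by zero, and the toy numbers are all assumptions.

```python
import math


def grnn_oracle_predict(x, known_X, known_Y, known_preds, unknown_preds, sigmas):
    """Combine classifier predictions for one unknown observation.

    x             : feature vector of the unknown observation
    known_X       : feature vectors of the known observations
    known_Y       : actual class-membership vectors of the known observations
    known_preds   : known_preds[j][i] = classifier j's class probabilities for known obs i
    unknown_preds : unknown_preds[j]  = classifier j's class probabilities for x
    sigmas        : one adjustable sigma per feature
    """
    n_features = len(x)

    # Formulas 2 and 3: distance to each known observation, then its weight.
    weights = []
    for xi in known_X:
        d = sum(((x[f] - xi[f]) / sigmas[f]) ** 2 for f in range(n_features)) / n_features
        weights.append(math.exp(-d))
    total_weight = sum(weights)

    # Formulas 1 and 4: per-observation MSE, then its weighted average per classifier.
    predicted_mse = []
    for preds_j in known_preds:
        mse = [
            sum((a - p) ** 2 for a, p in zip(actual, pred)) / len(actual)
            for actual, pred in zip(known_Y, preds_j)
        ]
        predicted_mse.append(sum(m * w for m, w in zip(mse, weights)) / total_weight)

    # Formula 5: trust is proportional to the inverse predicted error.
    eps = 1e-12  # assumption: avoids division by zero for a perfect classifier
    inverse = [1.0 / (m + eps) for m in predicted_mse]
    trust = [v / sum(inverse) for v in inverse]

    # Formula 7: trust-weighted sum of the classifiers' predictions for x.
    n_classes = len(unknown_preds[0])
    final = [
        sum(trust[j] * unknown_preds[j][c] for j in range(len(trust)))
        for c in range(n_classes)
    ]
    return final, trust


# Toy example (illustrative numbers): two classifiers, two known observations.
known_X = [(0.0, 0.0), (1.0, 1.0)]
known_Y = [(1, 0), (0, 1)]
known_preds = [
    [(0.9, 0.1), (0.2, 0.8)],  # classifier 0: close to the actual classes
    [(0.6, 0.4), (0.5, 0.5)],  # classifier 1: less accurate
]
unknown_preds = [(0.8, 0.2), (0.4, 0.6)]
final, trust = grnn_oracle_predict(
    (0.1, 0.1), known_X, known_Y, known_preds, unknown_preds, sigmas=(0.5, 0.5)
)
print(final, trust)
```

In this toy case the more accurate classifier receives the larger share of the trust, so the combined prediction leans toward its output.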

8. d) Recursive GRNN Oracle

The best combination of classifiers that were trained and tested individually and independently was used to make the first oracle. By having predictions outputted from the oracle, it now acts as any other machine learning classifier would. The best combination of classifiers that would enhance the performance of the first GRNN Oracle is selected and this selected combination, including the first oracle, creates the second oracle, the R-GRNN Oracle. The accuracy, AUC, sensitivity, and specificity of its final predictions are taken, along with the same performance metrics of the inner GRNN Oracle and the individual classifiers for the final comparison.
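The nesting of the two oracles can be sketched as follows. This is a hedged illustration only: the compact `oracle` helper uses a single shared sigma, the two known subsets `A` and `B` are an assumed way of giving the inner oracle predictions on known observations that the outer oracle can score, and every number is made up. The member choice (SVM, GNB, and RF for the inner oracle, then the inner oracle plus SVM) mirrors the combination reported in the results.

```python
import math


def oracle(x, known_X, known_Y, known_preds, unknown_preds, sigma=0.5):
    """Compact GRNN Oracle: returns the trust-weighted prediction for x."""
    weights = [
        math.exp(-sum(((a - b) / sigma) ** 2 for a, b in zip(x, xi)) / len(x))
        for xi in known_X
    ]
    inv_mse = []
    for preds_j in known_preds:
        mse = [
            sum((y - p) ** 2 for y, p in zip(yi, pi)) / len(yi)
            for yi, pi in zip(known_Y, preds_j)
        ]
        expected = sum(m * w for m, w in zip(mse, weights)) / sum(weights)
        inv_mse.append(1.0 / (expected + 1e-12))  # guard against zero error
    trust = [v / sum(inv_mse) for v in inv_mse]
    return [
        sum(t * p[c] for t, p in zip(trust, unknown_preds))
        for c in range(len(unknown_preds[0]))
    ]


# Two known subsets (assumption) and one unknown observation.
A_X, A_Y = [(0.0, 0.0), (1.0, 1.0)], [(1, 0), (0, 1)]
B_X, B_Y = [(0.2, 0.1), (0.9, 0.8)], [(1, 0), (0, 1)]
x = (0.15, 0.2)

# Hypothetical class-probability outputs of three already-trained classifiers.
preds = {
    "svm": {"A": [(0.9, 0.1), (0.2, 0.8)], "B": [(0.8, 0.2), (0.3, 0.7)], "x": (0.7, 0.3)},
    "gnb": {"A": [(0.7, 0.3), (0.4, 0.6)], "B": [(0.6, 0.4), (0.4, 0.6)], "x": (0.6, 0.4)},
    "rf": {"A": [(0.8, 0.2), (0.3, 0.7)], "B": [(0.9, 0.1), (0.2, 0.8)], "x": (0.8, 0.2)},
}
inner_members = ["svm", "gnb", "rf"]


def inner_oracle(point, member_preds):
    """Inner oracle: scores its members on subset A, then predicts `point`."""
    return oracle(point, A_X, A_Y, [preds[m]["A"] for m in inner_members], member_preds)


# The inner oracle now behaves like a classifier: it predicts subset B and x.
inner_on_B = [
    inner_oracle(bx, [preds[m]["B"][i] for m in inner_members])
    for i, bx in enumerate(B_X)
]
inner_on_x = inner_oracle(x, [preds[m]["x"] for m in inner_members])

# Outer (recursive) oracle: the inner oracle is combined with SVM, scored on subset B.
final = oracle(
    x, B_X, B_Y,
    [inner_on_B, preds["svm"]["B"]],
    [inner_on_x, preds["svm"]["x"]],
)
print(final)
```

Because each level only forms a trust-weighted average, the final output stays a valid class membership vector.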

9. IV.

10. Experimental Analysis and Results

11. a) Dataset Description

The Pima Indians Diabetes dataset used in this study originates from a study conducted by the National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK) on the Pima Indian population near Phoenix, Arizona, in 1965 (Smith et al., 1988). It contains 768 patients, 268 of whom have diabetes, which makes the dataset imbalanced. There are eight independent variables (features) and one dependent variable (outcome: diabetes or no diabetes), as presented in Table 1. More detailed attribute distributions and statistical analysis are shown in Figure 3, where the color orange signifies patients who have diabetes. All recorded patients are females of Pima Indian heritage who are at least 21 years old.

12. b) Data Preprocessing

The first step taken in the data preprocessing phase was excluding outliers, as they can drastically affect the model's predictive ability. Any point that was more than three standard deviations ($3\sigma$) away from the mean of any given feature was excluded. The original dataset had 768 patients; after outlier removal, the new dataset contained 709 patients, 243 of whom had diabetes. The next step was to correct the class imbalance: since only 243 of the remaining 709 patients had diabetes, those with diabetes make up only 34% of the data. Thus, an oversampling approach was applied to the minority class. Oversampling was favored over undersampling because the dataset already had a small number of observations, and because the recursive oracle requires the dataset to be relatively large for the data subsets to be drawn, removing observations would not be a wise approach. After this step, a normalization technique was applied to each independent variable, scaling it to a range between 0 and 1, with 0 indicating the lowest value of a particular feature and 1 indicating the highest. The normalization is given in Formula 8, where $\min X_i$ is the minimum value in the set of values of feature $X_i$ and $\max X_i$ is the maximum value of feature $X_i$. This is performed to ensure each feature has an equal weight, so that no one feature outweighs another before the prediction model is created.
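The three preprocessing steps can be sketched in plain Python. This is a minimal illustration under stated assumptions: the paper does not name its oversampling technique, so random duplication of minority rows is used here as a stand-in, the population standard deviation is an arbitrary choice, and the function names and toy data are hypothetical.

```python
import random
import statistics


def remove_outliers(rows, labels, n_sigma=3.0):
    """Drop rows where any feature lies more than n_sigma std devs from its mean."""
    columns = list(zip(*rows))
    means = [statistics.mean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]  # population std: an assumption
    kept = [
        (row, label)
        for row, label in zip(rows, labels)
        if all(abs(v - m) <= n_sigma * s for v, m, s in zip(row, means, stds))
    ]
    return [r for r, _ in kept], [l for _, l in kept]


def oversample_minority(rows, labels, seed=0):
    """Randomly duplicate minority-class rows until all classes are the same size."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    target = max(len(members) for members in by_class.values())
    out_rows, out_labels = [], []
    for label, members in by_class.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels


def min_max_scale(rows):
    """Scale every feature to [0, 1] using its minimum and maximum value."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]


# Toy data (illustrative only): 20 ordinary rows plus one extreme row.
rows = [(float(i % 5), 10.0 + i % 3) for i in range(20)] + [(500.0, 11.0)]
labels = [1 if i % 4 == 0 else 0 for i in range(20)] + [1]

rows, labels = remove_outliers(rows, labels)
rows, labels = oversample_minority(rows, labels, seed=0)
scaled = min_max_scale(rows)
print(len(scaled), labels.count(0), labels.count(1))
```

The steps are applied in the order given in the text: outlier removal, then oversampling, then scaling.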

$$X_i' = \frac{X_i - \min X_i}{\max X_i - \min X_i} \qquad (8)$$

13. c) Hyperparameter Optimization

The hyperparameters of any algorithm contribute greatly to the output of the model; therefore, determining the optimal (or near-optimal) combination of hyperparameters yields the best result. Hyperparameters are defined as the properties of a model whose values the user can set. For example, an NN's hyperparameters include the number of hidden layers and the number of hidden nodes in each hidden layer. They differ from parameters, which are changed internally by the model itself during training rather than set by the user before the training process. An example of a parameter is the weights of an NN, as they are adjusted through back propagation using Gradient Descent (or any other optimizer) rather than by the user.

GA was utilized to optimize the performances of the SVM and MLP, while Grid Search (GS) was applied to KNN and RF. GS was used instead of GA because KNN and RF each have one hyperparameter of interest: the number of neighbors and the number of Decision Trees (DTs), respectively. Therefore, no combinations of hyperparameters are needed, which makes it a straightforward exhaustive search. SVM and MLP, however, have more than one hyperparameter that needs to be optimized simultaneously, some of which take continuous values; this is why GA was used for them.
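For a single hyperparameter, the exhaustive search amounts to scoring every candidate value with cross validation and keeping the best one. The sketch below does this for KNN's number of neighbors with 4 folds; the helper names, the strided fold assignment, the candidate list, and the toy data are assumptions and do not reproduce the study's setup.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Majority vote among the k nearest (features, label) pairs in `train`."""
    nearest = sorted(
        train,
        key=lambda item: math.sqrt(sum((a - b) ** 2 for a, b in zip(item[0], query))),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def k_fold_accuracy(data, k_neighbors, n_folds=4):
    """Mean accuracy of KNN over n_folds folds (fold = every n_folds-th row)."""
    scores = []
    for fold in range(n_folds):
        test = data[fold::n_folds]
        train = [item for i, item in enumerate(data) if i % n_folds != fold]
        correct = sum(knn_predict(train, x, k_neighbors) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / n_folds


def grid_search(data, candidates):
    """Score every candidate value and return the best one with all scores."""
    results = {k: k_fold_accuracy(data, k) for k in candidates}
    best = max(results, key=results.get)
    return best, results


# Toy data (illustrative only): two well-separated one-dimensional clusters.
data = [((0.1 * i,), 0) for i in range(8)] + [((2.0 + 0.1 * i,), 1) for i in range(8)]
best_k, scores = grid_search(data, [1, 3, 5])
print(best_k, scores)
```

The same loop applies to RF's number of trees by swapping in a different scoring function.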

Formula 9 shows the fitness function ($ff$) used to evaluate each chromosome (each solution). Chromosomes were evaluated on their prediction accuracy, where $TP$ is the number of true positives (patients who have diabetes and were predicted to have diabetes), $TN$ is the number of true negatives (patients who do not have diabetes and were predicted not to have it), $FP$ is the number of false positives (patients without diabetes who were falsely predicted to have it), $FN$ is the number of false negatives (patients with diabetes who were falsely predicted not to have it), and $K$ is the number of folds in the K-fold cross validation, which was set to four for this study. The subscript $k$ denotes the counts obtained on fold $k$.

$$ff = \frac{1}{K} \sum_{k=1}^{K} \frac{TP_k + TN_k}{TP_k + TN_k + FP_k + FN_k} \qquad (9)$$

The SVM hyperparameters included in this study were $C$ and gamma ($\gamma$), both of which take continuous values. MLP's hyperparameters included the learning rate, momentum, the number of hidden layers, the number of hidden nodes in each hidden layer, and the solver; the learning rate and momentum are continuous, the numbers of hidden layers and nodes are integers, and the solver is categorical. Figure 4 and Figure 5 show the encoding (genotype) for the SVM and MLP hyperparameters, respectively, where each continuous hyperparameter was encoded as a binary chromosome with a length of 15 alleles.

SVMs can handle nonlinear classification by transforming inputs into feature vectors with the use of kernels. The SVM kernel set for this study is the Radial Basis Function (RBF), a popular Gaussian kernel function. Some of RBF's greatest advantages are its high accuracy, its fast convergence, and its applicability in almost any dimension. $C$ is a regularization hyperparameter that determines how strictly the hyperplane between the classes is required to separate the training data correctly. It controls the trade-off between model complexity and training error (Joachims, 2002). $\gamma$ has a serious impact on the classification accuracy, as it defines the reach of each training observation's influence (Tuba and Stanimirovic, 2017), with lower values meaning "far" and higher values meaning "close". It can be thought of as the inverse of the radius of influence of the observations selected by the model as support vectors. Figure 6-A shows the SVM accuracy achieved by GA in each of the 100 generations run.
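Two pieces of the GA setup lend themselves to a small sketch: decoding a 15-allele binary segment into a continuous hyperparameter, and evaluating the fitness of Formula 9 from per-fold confusion counts. The linear decoding scheme and the search ranges for $C$ and $\gamma$ are assumptions, since the paper gives the encoding only in figures; the fold counts are illustrative.

```python
def decode_bits(bits, low, high):
    """Map a binary chromosome segment linearly onto the interval [low, high]."""
    as_int = int("".join(str(b) for b in bits), 2)
    return low + (high - low) * as_int / (2 ** len(bits) - 1)


def fitness(fold_counts):
    """Formula 9: mean accuracy over K folds; each entry is (TP, TN, FP, FN)."""
    accuracies = [(tp + tn) / (tp + tn + fp + fn) for tp, tn, fp, fn in fold_counts]
    return sum(accuracies) / len(accuracies)


# A 30-allele SVM chromosome: the first 15 alleles encode C, the last 15 gamma.
chromosome = [0] * 15 + [1] * 15
C = decode_bits(chromosome[:15], low=0.1, high=100.0)      # range is an assumption
gamma = decode_bits(chromosome[15:], low=0.0001, high=1.0)  # range is an assumption

# Illustrative confusion counts for K = 4 folds of 100 observations each.
folds = [(20, 60, 10, 10), (25, 55, 10, 10), (22, 58, 8, 12), (18, 57, 12, 13)]
print(C, gamma, fitness(folds))
```

With 15 alleles, each continuous hyperparameter can take 32,768 evenly spaced values within its range.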

With MLP, the activation function set in this study was the Rectified Linear Unit (ReLU). Activation functions are operations that map a set of inputs to an output. They are used to impart non-linearity to the network structure (Acharya et al., 2017). Because ReLU returns a non-negative number, i.e. $\max(x, 0)$, its two major advantages are sparsity and a reduced likelihood of the "vanishing gradient" problem: adding more hidden layers does not cause the product of gradients to shrink to a value so small that it effectively vanishes. Solvers in NNs train and optimize the weights connecting the nodes between two adjacent layers. The two solvers considered for this study are Stochastic Gradient Descent (SGD) and Adam, a variant of SGD. The other two important hyperparameters are the learning rate and momentum. The learning rate controls how fast the network learns during training, and momentum helps the training converge (Acharya et al., 2017). They can be thought of as the step size and direction in the search space.

Feature selection plays an important role in classification for several reasons (Luukka, 2011). First, it can reduce the model's complexity, which helps reduce computational cost, and fewer inputs are needed when the model is put to practical use. Second, removing redundant features from the dataset can make the model more transparent and more comprehensible, providing a better explanation of the suggested diagnosis, which is an important requirement in medical applications. The feature selection process can also reduce noise, which may enhance classification accuracy.

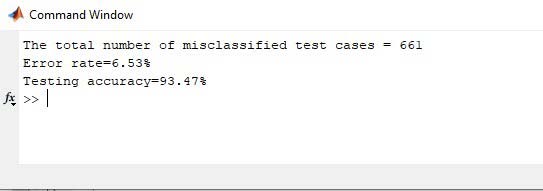

GA was applied for feature selection through SVM with its optimized hyperparameters from the previous step. The solution representation for feature selection was a chromosome of eight binary values (i.e., 0's and 1's). An allele of value 0 indicates that feature $i$ was not included, while an allele of 1 indicates that it was included; $i$ denotes the $i$-th feature in the dataset. To explain further, Figure 7 illustrates an example encoding in which features #1, #2, #3, and #6 out of the eight features are selected. Chromosomes with a subset of features selected are then evaluated based on their accuracy, with Formula 9 again used as the fitness function. The subset of features that attained the highest accuracy was selected for further analysis.

For the first GRNN Oracle (the inner oracle), the classifiers fed into it were SVM, GNB, and RF. The accuracy and AUC were 79.72% and 85.79% for SVM, 79.09% and 85.56% for GNB, and 77.50% and 81.15% for RF. The first oracle achieved an accuracy of 79.54%, an AUC of 85.16%, a sensitivity of 59.60%, and a specificity of 88.51%. MLP, PNN, and KNN were not chosen because of their inferior performances when compared to the other models. All models were run 15 times and the averages of the performance metrics were taken.
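The binary feature-selection chromosome described earlier in this section can be made concrete with the example of features #1, #2, #3, and #6. In the sketch below, the short feature names are shorthand for the Table 1 variables, and the helper `apply_mask` and the sample row are hypothetical.

```python
# Shorthand names for the eight features X1..X8 (labels are illustrative).
FEATURES = [
    "Pregnancies", "Glucose", "BloodPressure", "SkinThickness",
    "Insulin", "BMI", "DiabetesPedigree", "Age",
]


def apply_mask(row, chromosome):
    """Keep only the feature values whose allele is 1."""
    return [value for value, allele in zip(row, chromosome) if allele == 1]


# Features #1, #2, #3, and #6 selected out of eight.
chromosome = [1, 1, 1, 0, 0, 1, 0, 0]
selected = [name for name, allele in zip(FEATURES, chromosome) if allele == 1]

# One made-up observation, reduced to the selected features.
row = [2, 120.0, 70.0, 25.0, 80.0, 30.5, 0.4, 33]
print(selected, apply_mask(row, chromosome))
```

Each candidate chromosome yields a reduced dataset of this kind, which is then scored with the fitness function.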

For the R-GRNN Oracle, the first GRNN Oracle, which now acts as a classifier with its own predictions, was combined with SVM. Since SVM performed better than the other classifiers, it was chosen as a match with the first oracle to create the second oracle. Figure 8 illustrates the classifiers being fed into each of the two oracles. The first oracle achieved an accuracy of 79.54% and was surpassed by SVM (79.72%), but the recursive model had the highest accuracy at 81.14% and the highest AUC at 86.03%. It also reached the highest sensitivity (63.80%) in comparison to the rest; MLP, however, had the highest specificity (89.71%), and the recursive model came in third with 89.14% after MLP and SVM. Since detecting TPs (those who have diabetes) is of great importance, the sensitivity metric, on which the R-GRNN Oracle scored highest, carries more significance than specificity. Table 2 shows the accuracy, AUC, sensitivity, and specificity of all the classifiers: the six individual classifiers (performing on their own), the GRNN Oracle, and the R-GRNN Oracle. The performances can also be seen in Figure 9 and Figure 10. Figure 9 shows the recursive model's 15 runs, in which the best, average, and worst accuracies recorded were 86.47%, 81.14%, and 76.15%, respectively. It is worth mentioning that the dataset was shuffled each time the classifiers were run to ensure the robustness of the model: no matter how the data was shuffled, the recursive model always yielded better performance than the rest of the classifiers. Shuffling the data is the reason behind the high variation seen in Figure 9. Also, as mentioned earlier, 4-fold cross validation was applied to train and test the models, but the actual validation of each model was performed on a validation subset that was involved in neither the training nor the testing steps of that model.
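The difference between sensitivity and specificity is easiest to see from the confusion counts. The sketch below uses the standard definitions; the function name and the counts are illustrative and are not taken from the study's results.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity (true positive rate), and specificity (true negative rate)."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # share of diabetic patients that were detected
        "specificity": tn / (tn + fp),  # share of non-diabetic patients cleared correctly
    }


# Illustrative counts: 50 patients with diabetes, 95 without.
metrics = classification_metrics(tp=30, tn=85, fp=10, fn=20)
print(metrics)
```

A model can post a high specificity while still missing many diabetic patients, which is why sensitivity is given more weight in the comparison.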

14. Discussion

While the accuracy of the proposed model was not the highest in the literature, it still came in third when compared to all the publications studied (Table 3). It also bested the traditional oracle, SVM, MLP, RF, PNN, GNB, and KNN. As a slight remark, however, the studies did not confirm whether their accuracies were averages over several runs. In this study, the highest accuracy achieved by the recursive model in a single run was 86.47%, and one could simply have reported that figure. It is therefore wiser to conduct several runs and report the average.

15. Conclusion and Future Work

This study presented the R-GRNN Oracle and applied it to the Pima Indians Diabetes dataset. It was applied along with seven other classifiers, and their final performances were compared. The other classifiers were the traditional GRNN Oracle, SVM, MLP, RF, PNN, GNB, and KNN. GA was used to optimize the hyperparameters of SVM and MLP, and GS was used on RF and KNN. The models were run 15 times and the dataset was shuffled on each run to ensure robustness. 4-fold cross validation was adopted as the validation method. Compared to the other models, the recursive oracle achieved the highest accuracy, AUC, and sensitivity at 81.14%, 86.03%, and 63.80%, respectively. It came in third, however, for specificity at 89.14%, where the optimized MLP had the highest at 89.71%.

Future research may include applying feature selection and hyperparameter optimization simultaneously rather than applying feature selection based on the optimized hyperparameters from all the features. It can also include using other metaheuristics, such as Particle Swarm Optimization (PSO) for hyperparameter optimization.

16. References

... Square Support Vector Machine (LS-SVM) for the prediction of diabetes through Generalized Discriminant Analysis (GDA). Park and Edington (2001) applied a sequential multi-layered perceptron (SMLP) with back propagation learning on 6,142 participants. The early detection of type II diabetes was studied by Zhu et al. in 2015, who proposed a dynamic voting scheme ensemble. Thirugnanam et al. (2012) adopted techniques such as fuzzy logic, Neural Network (NN), and case-based reasoning as an individual approach (FNC) for the diagnosis of diabetes.

Regarding the dataset used in this study, the Pima Indian Diabetes dataset, various studies have used it to create models for the prediction and diagnosis of diabetes. Kayaer and Yildirim (2003) applied an MLP, a Radial Basis Function (RBF), and a General Regression Neural Network (GRNN) to the dataset. Their highest accuracy was achieved by the GRNN at 80.21%. Carpenter and Markuzon (1998) applied several techniques to the dataset including, but not limited to, KNN, Logistic Regression (LR), the perceptron-like ADAP model, ARTMAP, and ARTMAP-IC (named for instance counting and inconsistent cases), of which ARTMAP-IC obtained the highest accuracy at 81%. Bradley (1997) also used various classifiers on the dataset, with the main purpose of assessing the use of the AUC as a performance metric. The author achieved the highest accuracy of 78.4% using a two-layer MLP. A hybrid of Artificial Neural Network (ANN) and Fuzzy Neural Network (FNN) was proposed by Kahramanli and Allahverdi in 2008. Their approach resulted in an accuracy of 84.2%. Lekkas and Mikhailov (2010) applied Evolving Fuzzy Classification (EFC) to two datasets including the Pima Indians Diabetes dataset and reached an accuracy of 79.37%. Miche et al. (2010) presented the Optimally Pruned Extreme Learning Machine (OP-ELM) and compared its performance to an MLP, SVM, and Gaussian Process (GP) on several regression and classification datasets. On the dataset concerning this study, the GP had the highest accuracy among the classifiers tested at 76.3%. Huang et al. (2004) achieved an accuracy of 77.31% using SVM, although their paper proposed an algorithm called Extreme Learning Machine (ELM). Kumari and Chitra (2013) used SVM and obtained an accuracy of 78.2%. Al Jarullah (2011) also found the accuracy to be 78.2% using Decision Trees (DTs). Bradley and Mangasarian (1998) applied Feature Selection via Concave (FSC), SVM, and Robust Linear Program (RLP), of which the RLP had the highest accuracy on the Pima Indian Diabetes dataset at 76.16%. Using a novel Adaptive Synthetic (ADASYN) sampling approach, He et al. (2008) achieved an accuracy of 68.37%. Şahan et al. (2005) proposed a new artificial immune system named Attribute Weighted Artificial Immune System (AWAIS), with which they attained a classification accuracy of 75.87%. Luukka (2011) used a Similarity-Based (S-Based) classifier with fuzzy entropy measures as a feature selection method and reached an accuracy of 75.97%. Using Extreme Gradient Boosting (XGBoost), Christina et al. (2018) achieved 81% accuracy. Ramesh et al. (2017) used deep learning, more specifically a Restricted Boltzmann Machine (RBM), on the dataset with 81% accuracy. Vaishali et al. (2017) applied GA for feature selection with a Multi Objective Evolutionary Fuzzy (MOEF) classifier and obtained an accuracy of 83.04%.

It is a widely used optimization technique inspired by nature, more specifically, evolution and survival of the fittest. It finds solutions throughout the search space using two main operators: crossover and mutation. Every solution is represented as a chromosome with several alleles encoded with genetic material that measure the fitness value of the

Table 1: Independent and dependent variables of the Pima Indians Diabetes dataset

| Variable | Description | Type |
|---|---|---|
| X1 | No. of Pregnancies | Discrete |
| X2 | Plasma Glucose Concentration | Continuous |
| X3 | Diastolic Blood Pressure | Continuous |
| X4 | Skin Thickness | Continuous |
| X5 | 2-hr Serum Insulin | Continuous |
| X6 | BMI | Continuous |
| X7 | Diabetes Pedigree Function | Continuous |
| X8 | Age | Continuous |
| Y | Outcome: Diabetes/No Diabetes | Discrete |

Table 2: Accuracy, AUC, sensitivity, and specificity of all the classifiers (%)

| Classifier | Accuracy | AUC | Sensitivity | Specificity |
|---|---|---|---|---|
| SVM | 79.72 | 85.79 | 58.43 | 89.31 |
| MLP | 76.88 | 80.75 | 49.11 | 89.71 |
| RF | 77.50 | 81.15 | 57.11 | 86.65 |
| PNN | 71.03 | 75.54 | 61.43 | 75.24 |
| GNB | 79.09 | 84.56 | 60.44 | 87.58 |
| KNN | 76.59 | 80.77 | 58.72 | 84.53 |
| GRNN Oracle | 79.54 | 85.16 | 59.60 | 88.51 |
| R-GRNN Oracle | 81.14 | 86.03 | 63.80 | 89.14 |

| Method | Accuracy |

voice data. In Advanced Communication Technology (

Disease Neuroimaging Initiative. (2017). Classification of Alzheimer's disease and prediction of mild cognitive impairment-to-Alzheimer's conversion from structural magnetic resource imaging using feature ranking and a genetic algorithm. Computers in Biology and Medicine, 83, 109-119.

7. Belgiu, M., & Drăguţ, L. (2016). Random forest in remote sensing: A review of applications and future directions. ISPRS Journal of Photogrammetry and Remote Sensing, 114, 24-31.

8. Bhardwaj, A., & Tiwari, A. (2015). Breast cancer diagnosis using genetically optimized neural network model. Expert Systems with Applications, 42(10), 4611-4620.

9.