1. Introduction

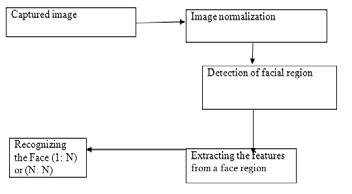

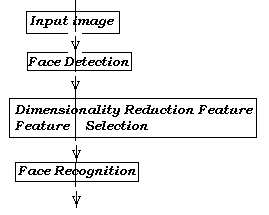

Face recognition has been one of the most demanding tasks for researchers since the 1990s. Researchers have obtained acceptable results for still images, i.e., images taken under controlled conditions. If the image contains problems such as illumination, pose variation, aging, or hair occlusion, the performance of the recognition process becomes poor. Most researchers are now focused on real-time applications. Many reviews have been carried out on face recognition [9][63][19][49][20]; they identify various existing methods for feature extraction and for the face recognition process. Generally, face recognition is divided into the steps of face detection, feature extraction, and face recognition. Image preprocessing, such as removing the background information and normalizing the original image for rotation, scaling, and resizing, is carried out before the face recognition process. Face detection locates the face in the normalized image, the feature extraction process then extracts the features from the detected face, and lastly the face recognition step recognizes the face by comparing it with a previously stored face database [9][63][19][49][20]. Figure 1 shows the face recognition process. It is not possible to deal directly with raw data as the quantity of data increases. Dimensionality reduction is the task that solves this difficulty by extracting the structured information and removing the redundant information. If the number of training images increases, the image matrix also grows; this is the problem known as the "curse of dimensionality", which is resolved by dimensionality reduction techniques. According to [15], there are two types of dimensionality reduction techniques: linear and nonlinear. The linear dimensionality reduction techniques include PCA, LDA, and LPP, and the nonlinear methods include ISOMAP and LLE.

The aim of this paper is to present the emerging techniques for dimensionality reduction, both linear and nonlinear. It is organized as follows: Section 2 contains the information about dimensionality reduction, Section 3 presents the current state of the art in face recognition methods that use component analysis, and Section 4 contains the conclusion of this paper.

2. II.

3. Dimensionality reduction techniques a) Overview

The most significant problem in face recognition is the curse of dimensionality. Dimensionality reduction methods are used to reduce the dimension of the space under consideration. When a system starts to memorize high-dimensional data, it causes overfitting, and computational complexity also becomes a major concern. The curse of dimensionality is alleviated by dimensionality reduction techniques [15]. According to [66], various methods exist for resolving the curse of dimensionality; some of them are linear and others are nonlinear. A linear technique transforms data from a high-dimensional subspace into a low-dimensional subspace by a linear map, but it fails on nonlinear data structures, whereas nonlinear methods handle complex nonlinear data structures well. Compared to linear methods, nonlinear methods are more capable when processing problematic images, for example images with hair occlusion or varying lighting conditions. Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), and Locality Preserving Projections (LPP) are some popular linear methods; nonlinear methods include Isometric Mapping (ISOMAP) and Locally Linear Embedding (LLE).

According to [93], feature selection finds a subset of the original variables. The two approaches are filter (e.g., information gain) and wrapper (e.g., genetic algorithm) approaches. It sometimes happens that data analysis such as regression or classification can be done in the reduced space more accurately than in the original space. Feature extraction applies a mapping of the multidimensional space into a space of fewer dimensions. This means that the original feature space is transformed, for example by applying a linear transformation. A brief introduction to feature extraction techniques is given in the next section.

4. b) Linear Feature Extraction of Dimensionality Reduction Techniques

Usually the face recognition process is divided into three areas. Holistic methods use the original image as input for the face recognition system; examples of holistic methods are PCA, LDA, and ICA. In feature-based methods, local feature points such as the eyes, nose, and mouth are first extracted and then sent to the classifier. Finally, hybrid methods use both the local features and the whole face region [9][63][19][49][20].

In dimensionality reduction, feature extraction is an important task that collects a set of features from an image. The feature transformation may be a linear or nonlinear combination of the original features. Some of the significant linear and nonlinear methods are listed as follows.

i. Principal Component Analysis (PCA)

PCA has been one of the most popular techniques for both dimensionality reduction and face recognition since the 1990s. Eigenfaces [17], built on the PCA technique, were introduced by M. A. Turk and A. P. Pentland. It is a holistic approach in which the input image is used directly for the process. The PCA algorithm finds a subspace whose basis vectors correspond to the directions of maximum variance in the original n-dimensional space. The PCA subspace can be used to represent data with minimum error in the reconstruction of the original data. Several survey papers provide more information on PCA techniques [9].

ii. Linear Discriminant Analysis (LDA)

LDA is one of the best-known linear techniques for dimensionality reduction and data classification. The main objective of LDA is to find a basis of vectors that provides the best discrimination among the classes, maximizing the between-class differences and minimizing the within-class ones by using scatter matrices. It suffers from the small sample size problem, which arises in high-dimensional pattern recognition tasks where the number of available samples is smaller than the dimensionality of the samples. D-LDA, R-LDA, and KDDA are variations of LDA. This technique is also discussed in several survey papers [20].

iii. Singular Value Decomposition (SVD)

SVD is an important tool in the fields of signal processing and statistics. It is considered the best linear dimensionality reduction technique based on the covariance matrix. The main aim is to reduce the dimension of the data by finding a few orthogonal linear combinations of the original variables with the largest variance [66]. Many researchers have also used this technique for face recognition.
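The PCA projection and reconstruction described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the Eigenfaces implementation of [17]: the function names `pca_fit`, `pca_project`, and `pca_reconstruct` are hypothetical, and each row of `X` is assumed to be one flattened image. The principal directions are taken from the SVD of the centered data, which also ties in with the SVD paragraph.

```python
import numpy as np

def pca_fit(X, k):
    """X has shape (n_samples, n_features). Returns the mean and the top-k axes."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are the directions of maximum variance, in decreasing order.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return mean, Vt[:k]

def pca_project(X, mean, components):
    """Coordinates of the samples in the k-dimensional PCA subspace."""
    return (X - mean) @ components.T

def pca_reconstruct(Z, mean, components):
    """Approximate the original samples from their subspace coordinates."""
    return Z @ components + mean
```

Keeping more components lowers the reconstruction error, which is the "minimum error" property the text refers to.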

5. iv. Independent Component Analysis (ICA)

ICA is a statistical and computational technique for revealing the hidden factors that underlie sets of random variables, measurements, or signals. ICA is superficially related to principal component analysis and factor analysis. The ICA algorithm aims at finding S components that are as independent as possible, so that the set of observed signals can be expressed as a linear combination of statistically independent components. When cosine measures are used for comparison, it is reported to perform better than PCA and LDA.

6. v. Locality Preserving Projections (LPP)

LPP can be seen as an alternative to Principal Component Analysis (PCA). When the high-dimensional data lie on a low-dimensional manifold embedded in the ambient space, the Locality Preserving Projections are obtained by finding the optimal linear approximations to the eigenfunctions of the Laplace-Beltrami operator on the manifold. As a result, LPP shares many of the data representation properties of nonlinear techniques such as Laplacian Eigenmaps or Locally Linear Embedding [15].

7. vi. Multi Dimensional Scaling (MDS)

Multidimensional scaling (MDS) is a linear model for dimensionality reduction. MDS generates a low-dimensional code that places emphasis on preserving the pairwise distances between the data points. If the rows and the columns of the data matrix D both have mean zero, the projection produced by MDS will be the same as that produced by PCA. Thus, MDS is a linear model for dimensionality reduction with the same limitations as PCA.
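The equivalence between MDS and PCA can be checked numerically. The sketch below assumes classical (metric) MDS applied to Euclidean distances, which is one common reading of the statement above; the function names `classical_mds` and `pca_scores` are hypothetical.

```python
import numpy as np

def classical_mds(D, k):
    """D is an (n, n) matrix of pairwise Euclidean distances. Returns an (n, k) embedding."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n   # centering matrix
    B = -0.5 * J @ (D ** 2) @ J           # double-centered Gram matrix
    w, V = np.linalg.eigh(B)              # eigenvalues in ascending order
    idx = np.argsort(w)[::-1][:k]         # keep the k largest
    return V[:, idx] * np.sqrt(np.maximum(w[idx], 0.0))

def pca_scores(X, k):
    """Coordinates of the centered samples along the top-k principal axes."""
    Xc = X - X.mean(axis=0)
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :k] * s[:k]
```

For distances computed from a data matrix `X`, the two embeddings agree up to the sign of each axis, so MDS inherits the linearity of PCA in this setting.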

8. vii. Partial Least Squares

Partial least squares (PLS) is a classical statistical learning method. It is widely used in chemometrics, bioinformatics, and related fields. In recent years, it has also been applied to face recognition and human detection. It can avoid the small sample size problem of linear discriminant analysis (LDA) and is therefore used as an alternative to LDA.

9. c) Non Linear Feature Extraction of Dimensionality Reduction Techniques

Nonlinear methods can be broadly classified into two groups: methods that provide a mapping (either from the high-dimensional space to the low-dimensional embedding or vice versa), which can be viewed as a preliminary feature extraction step, and methods whose visualization is based on neighbors' data such as distance measurements. Research on nonlinear dimensionality reduction methods has been explored extensively in the last few years. In the following, a brief introduction to several nonlinear dimensionality reduction techniques is given.

i. Kernel Principal Component Analysis (KPCA)

Kernel PCA (KPCA) is the reformulation of traditional linear PCA in a high-dimensional space that is constructed using a kernel function. In recent years, the reformulation of linear techniques using the 'kernel trick' has led to the proposal of successful techniques such as kernel ridge regression and Support Vector Machines. Kernel PCA computes the principal eigenvectors of the kernel matrix, rather than those of the covariance matrix. The reformulation of traditional PCA in kernel space is straightforward, since a kernel matrix is similar to the inner product of the data points in the high-dimensional space that is constructed using the kernel function. The application of PCA in kernel space gives Kernel PCA the property of constructing nonlinear mappings.
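As a rough illustration of computing the principal eigenvectors of the kernel matrix, the following sketch centers a kernel matrix in feature space and projects the training points onto its leading eigenvectors. The Gaussian kernel, the `gamma` parameter, and the function names are assumptions made for the example, not choices taken from the surveyed papers.

```python
import numpy as np

def rbf_kernel(X, gamma):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))

def kernel_pca(K, k):
    """Project the training points onto the top-k kernel principal components."""
    n = K.shape[0]
    one = np.ones((n, n)) / n
    # Center the data implicitly in the feature space.
    Kc = K - one @ K - K @ one + one @ K @ one
    w, V = np.linalg.eigh(Kc)             # eigenvalues in ascending order
    idx = np.argsort(w)[::-1][:k]
    return V[:, idx] * np.sqrt(np.maximum(w[idx], 0.0))
```

With a linear kernel (`K = X @ X.T`) the result reduces to ordinary PCA scores up to sign, which is a convenient sanity check; a nonlinear kernel gives the nonlinear mapping described above.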

ii. Isometric Mapping (ISOMAP)

Most linear methods do not take the neighboring data points into account. ISOMAP is a technique that resolves this problem by attempting to preserve the pairwise geodesic (or curvilinear) distances between data points. The estimation of geodesic distance divides into two cases. For neighboring points, the Euclidean distance in the input space provides a good approximation to the geodesic distance; for faraway points, the geodesic distance can be approximated by adding up a sequence of "short hops" between neighboring points. ISOMAP shares some advantages with PCA, LDA, and MDS, such as computational efficiency and asymptotic convergence guarantees, but with more flexibility to learn a broad class of nonlinear manifolds [15].
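The "short hops" approximation can be sketched as a shortest-path computation on a k-nearest-neighbor graph. The example below is a simplified illustration, assuming a symmetrized neighbor graph and the Floyd-Warshall algorithm; the function name `geodesic_distances` is hypothetical, and a full ISOMAP would then apply MDS to the resulting distance matrix.

```python
import numpy as np

def geodesic_distances(X, n_neighbors):
    """Approximate geodesic distances by shortest paths on a k-NN graph."""
    n = X.shape[0]
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    G = np.full((n, n), np.inf)
    np.fill_diagonal(G, 0.0)
    # Neighboring points: keep the Euclidean distance as the edge length.
    nn = np.argsort(D, axis=1)[:, 1:n_neighbors + 1]
    for i in range(n):
        G[i, nn[i]] = D[i, nn[i]]
        G[nn[i], i] = D[i, nn[i]]
    # Faraway points: add up short hops (Floyd-Warshall shortest paths).
    for m in range(n):
        G = np.minimum(G, G[:, m:m + 1] + G[m:m + 1, :])
    return G
```

For points sampled along a half circle of radius 1, the graph distance between the two endpoints comes out close to the arc length, while the straight-line distance is only 2.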

10. iii. Locally Linear Embedding

Locally linear embedding (LLE) is another approach that addresses the problem of nonlinear dimensionality reduction by computing a low-dimensional, neighborhood-preserving embedding of high-dimensional data. It is similar to ISOMAP in that it also constructs a graph representation of the data points. It describes the local properties of the manifold around a data point x_i by writing the data point as a linear combination w_i (the so-called reconstruction weights) of its k nearest neighbors x_ij, and attempts to retain the reconstruction weights in the linear combinations as well as possible [101] [102].
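The reconstruction weights of a single point can be sketched as a small constrained least-squares problem. The sketch assumes the standard LLE formulation (weights that sum to one, found by solving a regularized local Gram system); the function name `lle_weights` and the regularization constant `reg` are hypothetical choices for illustration.

```python
import numpy as np

def lle_weights(X, i, neighbors, reg=1e-3):
    """Weights that best reconstruct X[i] from X[neighbors], constrained to sum to 1."""
    k = len(neighbors)
    Z = X[neighbors] - X[i]                  # shift so that x_i is the origin
    C = Z @ Z.T                              # local Gram matrix, shape (k, k)
    C = C + reg * np.trace(C) * np.eye(k)    # regularize in case C is singular
    w = np.linalg.solve(C, np.ones(k))
    return w / w.sum()
```

For a point lying exactly midway between its two neighbors, the weights come out as one half each, so the weighted combination reproduces the point.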

11. iv. Laplacian Eigenmaps

An approach closely related to locally linear embedding is Laplacian eigenmaps. Given t points in n-dimensional space, the Laplacian eigenmaps method (LEM) starts by constructing a weighted graph with t nodes and a set of edges connecting neighboring points. Similar to LLE, the neighborhood graph can be constructed by finding the k nearest neighbors. The final objectives of LEM and LLE have the same form and differ only in how the matrix is constructed [101].

12. v. Stochastic Neighbor Embedding

Stochastic Neighbor Embedding (SNE) is a probabilistic approach that maps high-dimensional data points into a low-dimensional subspace in a way that preserves the relative distances to near neighbors. In SNE, similar objects in the high-dimensional space are placed nearby in the low-dimensional space, and dissimilar objects in the high-dimensional space are usually placed far apart in the low-dimensional space [102]. A Gaussian distribution centered on a point in the high-dimensional space is used to define the probability distribution with which the data point chooses other data points as its neighbors. SNE is superior to LLE in preserving the relative distances between every two data points.

vi. Semidefinite Embedding (SDE)

Semidefinite Embedding (SDE) can be seen as a variation of KPCA, with an algorithm based on semidefinite programming. SDE learns a kernel matrix by maximizing the variance in feature space while preserving the distances and angles between nearest neighbors. It has several interesting properties: the main optimization is convex and guaranteed to preserve certain aspects of the local geometry; the method always yields a positive semidefinite kernel matrix; the eigenspectrum of the kernel matrix provides an estimate of the underlying manifold's dimensionality; and the method does not rely on estimating geodesic distances between faraway points on the manifold. This particular combination of advantages appears unique to SDE.
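The Gaussian neighbor probabilities used by SNE can be sketched as follows. For simplicity the example assumes a single shared bandwidth `sigma` for all points, whereas SNE normally tunes one bandwidth per point; the function name `sne_conditional_probabilities` is hypothetical.

```python
import numpy as np

def sne_conditional_probabilities(X, sigma):
    """P[i, j] is the probability that point i picks point j as its neighbor."""
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    logits = -d2 / (2.0 * sigma ** 2)
    np.fill_diagonal(logits, -np.inf)               # a point never picks itself
    logits -= logits.max(axis=1, keepdims=True)     # for numerical stability
    P = np.exp(logits)
    return P / P.sum(axis=1, keepdims=True)
```

Each row is a probability distribution over the other points, and closer points receive higher probability, which is the property the low-dimensional map is then trained to reproduce.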

13. III.

Contemporary affirmation of the recent literature

a) Face recognition using 2 Dimensional PCA

Sirovich and Kirby [2], [3] first used PCA to efficiently represent pictures of human faces. They argued that any face image could be reconstructed approximately as a weighted sum of a small collection of images that define a facial basis (eigenimages), and a mean image of the face. Within this context, Turk and Pentland [4] presented the well-known Eigenfaces method for face recognition in 1991. Since then, PCA has been widely investigated and has become one of the most successful approaches in face recognition [5], [6], [7], [8]. Penev and Sirovich [9] discussed the problem of the dimensionality of the "face space" when Eigenfaces are used for representation. Zhao and Yang [10] tried to account for the arbitrary effects of illumination in PCA-based vision systems by generating an analytically closed-form formula of the covariance matrix for the case with a special lighting condition and then generalizing to an arbitrary illumination via an illumination equation. However, Wiskott et al. [11] pointed out that PCA could not capture even the simplest invariance unless this information is explicitly provided in the training data. They proposed a technique known as elastic bunch graph matching to overcome the weaknesses of PCA.

Recently, two PCA-related methods, independent component analysis (ICA) and kernel principal component analysis (Kernel PCA), have been of wide concern. Bartlett et al. [12] and Draper et al. [13] proposed using ICA for face representation and found that it was better than PCA when cosines were used as the similarity measure (however, their performance was not significantly different if the Euclidean distance is used). Yang [14] used Kernel PCA for face feature extraction and recognition and showed that the Kernel Eigenfaces method outperforms the classical Eigenfaces method. However, ICA and Kernel PCA are both computationally more expensive than PCA. The experimental results in [14] showed that the ratio of the computation time required by ICA, Kernel PCA, and PCA is, on average, 8.7 : 3.2 : 1.0.

In the PCA-based face recognition technique, the 2D face image matrices must be previously transformed into 1D image vectors. The resulting image vectors of faces usually lead to a high-dimensional image vector space, where it is difficult to evaluate the covariance matrix accurately due to its large size and the relatively small number of training samples. Fortunately, the eigenvectors (Eigenfaces) can be calculated efficiently using the SVD techniques [2], [3], and the process of generating the covariance matrix is actually avoided. However, this does not imply that the eigenvectors can be evaluated accurately in this way, since the eigenvectors are statistically determined by the covariance matrix, no matter what method is adopted for obtaining them.

In this context, Jian Yang et al. [1] developed a straightforward image projection technique, called two-dimensional principal component analysis (2DPCA), for image feature extraction. As opposed to conventional PCA, 2DPCA is based on 2D matrices rather than 1D vectors. That is, the image matrix does not need to be previously transformed into a vector. Instead, an image covariance matrix can be constructed directly using the original image matrices. In contrast to the covariance matrix of PCA, the size of the image covariance matrix using 2DPCA is much smaller. As a result, 2DPCA has two important advantages over PCA. First, it is easier to evaluate the covariance matrix accurately. Second, less time is required to determine the corresponding eigenvectors.
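The size advantage of the image covariance matrix can be made concrete with a short sketch. It assumes the usual 2DPCA formulation, in which the image covariance matrix is the average of (A - mean)^T (A - mean) over the training images A; the function names are hypothetical and the code is an illustration, not the implementation of [1].

```python
import numpy as np

def image_covariance(images):
    """images has shape (M, m, n). Returns the (n, n) image covariance matrix."""
    centered = images - images.mean(axis=0)
    # Sum over images j and rows k of centered[j, k, i] * centered[j, k, l].
    return np.einsum('jki,jkl->il', centered, centered) / images.shape[0]

def two_d_pca(images, d):
    """Top-d eigenvectors of the image covariance matrix, as an (n, d) matrix."""
    w, V = np.linalg.eigh(image_covariance(images))
    return V[:, np.argsort(w)[::-1][:d]]

def two_d_pca_features(image, W):
    """Project one (m, n) image onto the d axes, giving an (m, d) feature matrix."""
    return image @ W
```

For 8 x 6 images the matrix to decompose is only 6 x 6, whereas conventional PCA on the flattened images would need a 48 x 48 covariance matrix. The feature matrix has m x d entries per image, which reflects the larger number of coefficients that 2DPCA needs.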

Observation: A new technique for image feature extraction and representation, two-dimensional principal component analysis (2DPCA), was developed. 2DPCA has many advantages over conventional PCA (Eigenfaces). In the first place, since 2DPCA is based on the image matrix, it is simpler and more straightforward to use for image feature extraction. Second, 2DPCA is better than PCA in terms of recognition accuracy in all experiments. Although this trend seems to be consistent for different databases and conditions, in some experiments the differences in performance were not statistically significant. Third, 2DPCA is computationally more efficient than PCA and it can improve the speed of image feature extraction significantly. However, it should be pointed out that 2DPCA-based image representation was not as efficient as PCA in terms of storage requirements, since 2DPCA requires more coefficients for image representation than PCA. There are still some aspects of 2DPCA that deserve further study. When a small number of the principal components of PCA are used to represent an image, the mean square error (MSE) between the approximation and the original pattern is minimal. Does 2DPCA have a similar property? In addition, although a feasible way to deal with the larger number of coefficients is to use PCA after 2DPCA for further dimensional reduction, it is still unclear how the dimension of 2DPCA could be reduced directly.

IV.

14. Face recognition using kernel based PCA

Popular representation methods for face recognition include Principal Component Analysis (PCA) [16], [17], [18], shape and texture ('shape-free' representation) of faces [10], [5], [21], [22], [23], and Gabor wavelet representation [24], [25], [26], [27], [28]. Discrimination methods often try to achieve high separability among the different patterns in whose classification one is interested [18], [29]. Commonly used discrimination methods include the Bayes classifier and the MAP rule [30], [28], Fisher Linear Discriminant (FLD) [31], [4], [33], [23], and more recently kernel PCA methods [34], [38], [35], [36].

Chengjun Liu et al. [1] presented a novel Gabor-based kernel Principal Component Analysis (PCA) method that integrates the Gabor wavelet representation of face images and the kernel PCA method for face recognition. Gabor wavelets [25], [37] first derive desirable facial features characterized by spatial frequency, spatial locality, and orientation selectivity to cope with the variations due to illumination and facial expression changes. The kernel PCA method [38] is then extended to include fractional power polynomial models for enhanced face recognition performance. A fractional power polynomial, however, does not necessarily define a kernel function, as it might not define a positive semidefinite Gram matrix. Note that the sigmoid kernels, one of the three classes of widely used kernel functions (polynomial kernels, Gaussian kernels, and sigmoid kernels), do not actually define a positive semidefinite Gram matrix either [38]. Nevertheless, the sigmoid kernels have been successfully used in practice, such as in building support vector machines. In order to derive real kernel PCA features, only those kernel PCA eigenvectors that are associated with positive eigenvalues are applied.
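The idea of keeping only the eigenvectors with positive eigenvalues can be sketched as below. The fractional power form used here, sign(x·y)|x·y|^d with 0 < d < 1, is an assumption made for illustration and may differ in detail from the model in the cited work; the function names and the tolerance `tol` are hypothetical.

```python
import numpy as np

def fractional_power_gram(X, d):
    """Gram matrix of an assumed fractional power model sign(x.y) * |x.y|**d."""
    G = X @ X.T
    return np.sign(G) * np.abs(G) ** d

def positive_kernel_pca_axes(K, tol=1e-10):
    """Eigenpairs of the centered Gram matrix, restricted to positive eigenvalues."""
    n = K.shape[0]
    one = np.ones((n, n)) / n
    Kc = K - one @ K - K @ one + one @ K @ one
    w, V = np.linalg.eigh(Kc)
    keep = w > tol            # discard zero and negative eigenvalues
    return w[keep], V[:, keep]
```

Because such a Gram matrix need not be positive semidefinite, some eigenvalues can be negative; filtering them keeps the resulting features real-valued.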

Observation: Chengjun Liu et al. [1] introduced a novel Gabor-based kernel PCA method with fractional power polynomial models for frontal and pose-angled face recognition. Gabor wavelets first derive desirable facial features characterized by spatial frequency, spatial locality, and orientation selectivity to cope with the variations due to illumination and facial expression changes. The kernel PCA method is then extended to include fractional power polynomial models for improved face recognition performance. The feasibility of the Gabor-based kernel PCA method with fractional power polynomial models has been successfully tested on both frontal and pose-angled face recognition, using two data sets from the FERET database and the CMU PIE database, respectively.

V.

15. Gabor filters and KPCA for Face Recognition

Over the past ten years, many approaches have been attempted to solve the face recognition problem [40] - [52]. One of the very successful and popular face recognition methods is based on principal components analysis (PCA) [40]. In 1987, Sirovich and Kirby [40] showed that if the eigenvectors corresponding to a set of training face images are obtained, any image in that database can be optimally reconstructed using a linear weighted combination of these eigenvectors. Their work explored the representation of human faces in a lower-dimensional subspace. In 1991, Turk and Pentland [17] used these eigenvectors (or Eigenfaces, as they are known) for face recognition. PCA was used to yield projection directions that maximize the total scatter across all faces in the training set. They also extended their approach to the real-time recognition of a moving face image in a video sequence [53]. Another popular scheme for dimensionality reduction in face recognition is due to Belhumeur et al. [4], Etemad and Chellappa [48], and Swets and Weng [31]. It is based on Fisher's linear discriminant (FLD) analysis. The FLD uses class membership information and develops a set of feature vectors in which variations of different faces are emphasized while different instances of a face due to illumination conditions, facial expressions, and orientations are de-emphasized. The FLD method deals directly with discrimination between classes, whereas the eigenface recognition (EFR) method deals with the data in its entirety without paying any particular attention to the underlying class structure. It is generally believed that algorithms based on FLD are superior to those based on PCA when sufficient training samples are available. But as shown in [54], this is not always the case.

Methods such as EFR and FLD work quite well provided the input test pattern is a face, i.e., the face image has already been cropped out of a scene. The problem of recognizing faces in still images with a cluttered background is more general and difficult, as one does not know where a face pattern might appear in a given image. A good face recognition system should possess the following two properties. It should: 1) detect and recognize all the faces in a scene, and 2) not misclassify localized patterns as faces.

Since faces are usually sparsely distributed in images, even a few false alarms will render the system ineffective. Also, the performance should not be too sensitive to any threshold selection. Some attempts to address this situation are discussed in [17], [30], where the use of distance from eigenface space (DFFS) and distance in eigenface space (DIFS) are suggested to detect and eliminate non-faces for robust face recognition in clutter. It has been shown that DFFS and DIFS by themselves (in the absence of any information about the background) are not sufficient to discriminate against arbitrary background patterns. If the threshold is set high, traditional EFR invariably ends up missing faces. If the threshold is lowered to capture the faces, the technique incurs many false alarms. Thus, the scheme is quite sensitive to the choice of the threshold value.
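The two distances can be sketched for a single test pattern as follows. This is an illustration under stated assumptions: DFFS is taken as the squared reconstruction residual outside the eigenface subspace, and DIFS as a Mahalanobis-style distance inside it; the function name `dffs_difs` and its arguments are hypothetical, and `components` is assumed to hold orthonormal eigenfaces as rows.

```python
import numpy as np

def dffs_difs(x, mean, components, eigenvalues):
    """Return (DFFS, DIFS) of pattern x for an eigenface subspace."""
    centered = x - mean
    coeffs = components @ centered                   # coordinates in face space
    residual = centered - components.T @ coeffs      # part outside face space
    dffs = float(residual @ residual)                # distance from face space
    difs = float(np.sum(coeffs ** 2 / eigenvalues))  # distance in face space
    return dffs, difs
```

A detector built on these quantities has to compare them against a threshold, which is where the sensitivity discussed above comes from.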

One possible approach to handle clutter in still images is to use a good face detection module to find face patterns and then feed only these patterns as inputs to the traditional EFR scheme. Face detection is a research problem in itself and different approaches exist in the literature [55], [56], [57]. Most of the work assumes the pose to be frontal. For a recent and comprehensive survey of face recognition techniques, see [58], [59]. Rajagopalan et al. [39] proposed a new methodology within the PCA framework to robustly recognize faces in a given test image with background clutter (see Figure 2). Toward this end, they construct an "eigenbackground space" which represents the distribution of the background images corresponding to the given test image. The background is learned "on the fly" and provides a sound basis for eliminating false alarms. An appropriate pattern classifier is derived, and the eigenbackground space together with the eigenface space is used to simultaneously detect and recognize faces.

Linear subspace analysis, which considers a feature space as a linear combination of a set of bases, has been widely used in face recognition applications. This is mainly due to its effectiveness and computational efficiency for feature extraction and representation. Different criteria will produce different bases and, consequently, the transformed subspace will also have different properties. Principal component analysis (PCA) [16], [17] is the most popular technique; it generates a set of orthogonal bases that capture the directions of maximum variance in the training data, and the PCA coefficients in the subspace are uncorrelated. PCA can preserve the global structure of the image space, and is optimal in terms of representation and reconstruction. Because only the second-order dependencies in the PCA coefficients are eliminated, PCA cannot capture even the simplest invariance unless this information is explicitly provided in the training data [64]. Independent component analysis (ICA) [65], [28] can be considered a generalization of PCA, which aims to find some independent bases by methods sensitive to high-order statistics. However, [67], [68] reported that ICA gave the same, sometimes even a little worse, recognition accuracy as PCA. Linear discriminant analysis (LDA) [4] seeks to find a linear transformation that maximizes the between-class scatter and minimizes the within-class scatter, which preserves the discriminating information and is suitable for recognition. However, this method needs more than one image per person as a training set; furthermore, [54] shows that PCA can outperform LDA when the training set is small, and the former is less sensitive to different training sets. Locality preserving projection (LPP) [71] obtains a face subspace that best detects the essential face manifold structure, and preserves the local information about the image space. When the proper dimension of the subspace is selected, the recognition rates using LPP are better than those using PCA or LDA, based on different databases. However, this conclusion is achieved only if multiple training samples from each person are available; otherwise, LPP will give a similar performance level as PCA.

With Cover's theorem, nonlinearly separable patterns in an input space will become linearly separable with a high probability if the input space is transformed nonlinearly into a high-dimensional feature space [72]. We can, therefore, map an input image into a high-dimensional feature space, so that linear discriminant methods can then be employed for face recognition. This mapping is usually realized via a kernel function [38] and, according to the method used for recognition in the high-dimensional feature space, we have a set of kernel-based methods, such as kernel PCA (KPCA) [38], [34], [36], [76] or kernel Fisher discriminant analysis (KFDA) [77], [78], [79], [80]. KPCA and KFDA are linear in the high-dimensional feature space, but nonlinear in the low-dimensional image space. In other words, these methods can discover the nonlinear structure of the face images, and encode higher-order information [76]. Although kernel-based methods can overcome many of the limitations of a linear transformation, [71] pointed out that none of these methods explicitly considers the structure of the manifold on which the face images possibly reside. Furthermore, the kernel functions used are devoid of explicit physical meaning, i.e., how and why a kernel function is suitable for a pattern of a human face, and how to obtain a nonlinear structure useful for discrimination.

In this context, Xudong Xie et al. [60] proposed a novel method for face recognition, which uses only one image per person for training, and is robust to lighting, expression, and perspective variations. In this method, the Gabor wavelets [28], [81], [82] are used to extract facial features, then a Doubly nonlinear mapping Kernel PCA (DKPCA) is proposed to perform the feature transformation and face recognition. Doubly nonlinear mapping means that, besides the conventional kernel function, a new mapping function is also defined and used to emphasize those features having higher statistical probabilities and spatial importance of face images. More specifically, this new mapping function considers not only the statistical distribution of the Gabor features, but also the spatial information about human faces. After this nonlinear mapping, the transformed features have a higher discriminating power, and the importance of the features adapts to the spatial importance of the face images. Therefore, it has the ability to reduce the effect of feature variations due to illumination, expression, and perspective disturbance.

Observation: Xudong Xie et al. [60] argue that, when facial expressions are used as features to recognize faces, PCA-based face recognition models are not stable. Hence they proposed a novel doubly nonlinear mapping Gabor-based KPCA for human face recognition. In this approach, the Gabor wavelets are used to extract facial features, then a doubly nonlinear mapping KPCA is proposed to perform feature transformation and face recognition. Compared with conventional KPCA, an additional nonlinear mapping is performed in the original space. The new nonlinear mapping not only considers the statistical properties of the input features, but also adopts an eigenmask to emphasize those features derived from the important facial feature points. Therefore, after the mappings, the transformed features have a higher discriminant power, and the importance of the features adapts to the spatial importance of the face image. To improve face recognition accuracy, Jie Zou et al. [61] showed that combining multi-scale Gabor features or multi-resolution LBP features generally achieves higher classification accuracy than the individual feature sets, which is not considered in the model proposed by Xudong Xie et al. [60]; that model also does not consider that Gabor features are sensitive to high gradients and their orientations.

16. VI.

17. ICA and PCA compatibility for Face Recognition

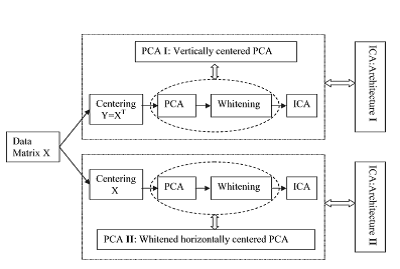

Recently, a technique closely related to PCA, independent component analysis (ICA) [83], has received wide attention. ICA can be viewed as a generalization of PCA, since it is concerned not only with second-order dependencies between variables but also with high-order dependencies among them. PCA makes the data uncorrelated, while ICA makes the data as independent as possible. Generally, there are two arguments for using ICA for face representation and recognition. First, the high-order relationships between image pixels may contain information that is important in recognition tasks. Second, ICA seeks directions such that the projections of the data onto those directions have maximally "non-Gaussian" distributions. These projections may be interesting and useful in classification tasks [83], [86]. Bartlett et al. [84], [65] were among the first to apply ICA to face representation and recognition. They used the Infomax algorithm [87], [88] to implement ICA and suggested two ICA architectures (ICA Architectures I and II) for face representation. Both architectures were evaluated on a subset of the FERET face database and were found to be effective for face recognition [65]. Yuen and Lai [90], [91] adopted the fixed-point algorithm [89] to obtain the independent components (ICs) and used a Householder transform to obtain the least-squares solution of a face image for representation. Liu and Wechsler [92], [28], [94] used an ICA algorithm given by Comon [100] to perform ICA and assessed its performance for face recognition. All of these researchers claim that ICA outperforms PCA in face recognition.

Other researchers, however, reported differently. Baek et al. [95] reported that PCA outperforms ICA, while Moghaddam [36] and Jin and Davoine [97] reported no significant performance difference between the two methods. Socolinsky and Selinger [98] reported that ICA outperforms PCA on visible images but PCA outperforms ICA on infrared images. Draper et al. [99] tried to account for these apparently contradictory results. They retested ICA and PCA on the FERET face database with 1196 individuals, made a comprehensive comparison of the performance of the two techniques, and found that the relative performance of ICA and PCA mainly depends on the ICA architecture and the distance metric. Their experimental results showed that: 1) ICA Architecture II with the cosine distance significantly outperforms PCA with the L1 (city block), L2 (Euclidean), and cosine distance metrics, which is consistent with Bartlett's and Liu's results; 2) PCA with the L1 distance outperforms ICA Architecture I, which is in favor of Baek's results; and 3) ICA Architecture II with L2 still significantly outperforms PCA with L2, although the degree of significance is not as great as for ICA Architecture II with cosine over PCA. Moreover, it must be noted that this last result is still inconsistent with Moghaddam's and Jin's results. An interesting by-product of the comparative research into ICA and PCA is the finding that different versions of ICA algorithms seem to perform similarly in face-recognition tasks. Moghaddam [36] showed that the basis images resulting from Hyvärinen's fixed-point algorithm are very similar to those from Cardoso's JADE algorithm [104]. Draper et al. [99] verified that the performance difference between the Infomax algorithm [87] and FastICA [89], [103] is insignificant.
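Because the reported ranking of ICA and PCA depends on the distance metric, it may help to see how the three metrics named above can disagree on the same feature vectors. The following is a minimal sketch, assuming that gallery and probe images have already been projected to feature vectors; the function names are hypothetical and the code does not reproduce the experimental protocol of [99].

```python
import numpy as np

def distances(gallery, probe, metric):
    """Distance from one probe vector to every row of the gallery matrix."""
    if metric == "l1":        # city block
        return np.abs(gallery - probe).sum(axis=1)
    if metric == "l2":        # Euclidean
        return np.sqrt(((gallery - probe) ** 2).sum(axis=1))
    if metric == "cosine":    # one minus the cosine of the angle between vectors
        num = gallery @ probe
        den = np.linalg.norm(gallery, axis=1) * np.linalg.norm(probe)
        return 1.0 - num / den
    raise ValueError(f"unknown metric: {metric}")

def nearest_identity(gallery, labels, probe, metric="l2"):
    """Return the label of the gallery vector closest to the probe."""
    return labels[int(np.argmin(distances(gallery, probe, metric)))]
```

For example, with gallery vectors `[1, 1]` and `[4, 0]` and the probe `[5, 5]`, the cosine distance selects the first vector (same direction), while L1 and L2 select the second (smaller displacement), which is the kind of metric dependence the comparison above describes.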

Previous researchers [84], [99] usually use standard PCA as the baseline algorithm to evaluate ICA-based face-recognition systems. This, however, raises the question of whether standard PCA is a good choice for evaluating ICA. The ICA process, as shown in Fig. 1, involves not only a PCA process but also a whitening step. After the whitening step, we get the whitened PCA features of the data. How does the performance of these whitened PCA features compare with that of standard PCA features and ICA features? This issue has not been addressed yet. The role of the whitening step, particularly its potential effect on recognition performance, is still unclear. In cases where the performance of ICA is significantly different from that of PCA, it is critically important to determine what causes this difference: the whitening process or the subsequent pure ICA projection.

If the whitened PCA features can perform as well as ICA features, it is certainly unnecessary to use a computationally expensive ICA projection for further processing. It seems that standard PCA is not as suitable a baseline algorithm as "PCA + whitening" (whitened PCA) for evaluating ICA.
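To make the difference between standard PCA features and whitened PCA features concrete, the following is a minimal sketch using NumPy. It assumes one image per row and centering by subtracting the mean image; the function name is hypothetical, and the sketch does not attempt to reproduce the specific centering modes of any ICA architecture.

```python
import numpy as np

def pca_whiten(X, n_components):
    """Return standard PCA features and whitened PCA features for the rows of X."""
    mean = X.mean(axis=0)
    Xc = X - mean                                   # assumed centering: subtract the mean sample
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]                  # principal axes, one per row
    std = s[:n_components] / np.sqrt(X.shape[0] - 1)
    Z_pca = Xc @ components.T                       # uncorrelated, unequal variances
    Z_white = Z_pca / std                           # uncorrelated, unit variances
    return Z_pca, Z_white
```

Whitening only rescales each principal axis to unit variance, so it is cheap compared with an iterative ICA projection, yet it changes every distance computed between feature vectors.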

In this context, Jian Yang et al. [82] examine two ICA-based image representation architectures (see Figure 3) and find that ICA Architecture I involves a vertically centered PCA process (PCA I), while ICA Architecture II involves a whitened horizontally centered PCA process (PCA II). Therefore, it is natural to use these two PCA versions as baseline algorithms to evaluate the performance of ICA-based face-recognition systems. It should be stressed that their objective is not to determine whether ICA or PCA is better but to investigate, first, what role the PCA whitening step and centering mode play in ICA-based face-recognition systems and, second, what effect the pure ICA projection has on face-recognition performance. They also consider how the performances of the two ICA architectures depend on their related PCA versions. It is hoped that this investigation may clarify why ICA outperforms PCA in some cases and not in others.

Observation: Jian Yang et al. [82] examined two ICA-based image representation architectures and established that ICA Architecture I involves a vertically centered PCA process (PCA I), while ICA Architecture II involves a whitened horizontally centered PCA process (PCA II). They then used these two PCA versions as baseline algorithms to reevaluate the performance of ICA-based face-recognition systems. From the experimental results, the following can be concluded. First, there is no significant performance difference between ICA Architecture I (II) and PCA I (II), although in some cases there is a significant difference between ICA Architecture I (II) and standard PCA. Second, the performance of ICA strongly depends on the PCA process that it involves; the pure ICA projection seems to have only a trivial effect on face-recognition performance. Third, the centering mode and the whitening step in PCA I (or II) play a central role in inducing the performance differences between ICA Architecture I (II) and standard PCA.

The added discriminative power of the "independent features" produced by the pure ICA projection is not so satisfying. Therefore, the future task is to explore effective ways to obtain more powerful independent features for face representation.

18. VII.

Super-resolution as a feature in Face recognition

Super-resolution is becoming increasingly important for several multimedia applications [106]. It refers to the process of reconstructing a high-resolution image from low-resolution frames. Most techniques [107], [108], [109], [110], [111] assume knowledge of the geometric warp of each observation and the nature of the blur. However, the effectiveness of such reconstruction-based super-resolution algorithms, which do not include any specific prior information about the image being super-resolved, has been shown to be inherently limited [112], [113]. A learning-based method has been suggested in [112] to super-resolve face images. It uses a prior based on the error between the gradient values of the corresponding high-resolution pixel in the training image and in the estimated image. But this makes it sensitive to image alignment, scale, and noise. Gunturk et al. [114] perform super-resolution in the eigenface space. Since their aim is face recognition, they reconstruct only the weights along the principal components instead of trying to produce a high-resolution estimate that is visually superior. In [115], a method is presented that super-resolves faces by first finding the best fit to the observations in the eigenface domain. A patch-based Markov network is then used to add residual high-frequency content. Some additional learning-based approaches are discussed in [116], [117], [118].
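The blur and low-resolution sampling that reconstruction-based methods assume to be known can be illustrated with a simple forward model. The following is a minimal sketch, assuming a box blur, integer decimation, additive Gaussian noise, and no geometric warp; the function name and parameters are hypothetical and are not taken from any of the cited methods.

```python
import numpy as np

def observe(hr, factor, noise_std, rng):
    """Simulate one low-resolution observation of the high-resolution image hr."""
    h, w = hr.shape
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of the decimation factor")
    # Averaging each factor x factor block equals a box blur followed by decimation.
    lr = hr.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    # Additive sensor noise (assumed Gaussian and independent per pixel).
    return lr + rng.normal(scale=noise_std, size=lr.shape)
```

Super-resolution tries to invert this mapping; because many high-resolution images produce the same low-resolution observation, prior information about the image class is needed to pick a plausible one.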

In this context, Ayan Chakrabarti et al. [105] introduced a learning-based method for super-resolution of faces that uses kernel principal component analysis (PCA) to derive prior knowledge about the face class. Kernel PCA is a nonlinear extension of traditional PCA for capturing higher-order correlations in a data set. The proposed model uses kernel PCA to extract valuable prior information in a computationally efficient manner and shows that it can be used within a maximum a posteriori (MAP) framework, along with the observation model, to improve the quality of the super-resolved face image.
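In generic form, a MAP estimate of this kind can be written as below. The notation is an assumption made for illustration and is not taken from [105]: $\mathbf{y}$ is the observed low-resolution image, $\mathbf{x}$ the unknown high-resolution image, $B$ a blur operator, $D$ a decimation operator, $\sigma$ the standard deviation of additive Gaussian noise, and $p(\mathbf{x})$ the prior over face images (here, the one learned with kernel PCA).

$$
\hat{\mathbf{x}} = \arg\max_{\mathbf{x}} \; p(\mathbf{y} \mid \mathbf{x})\, p(\mathbf{x})
= \arg\min_{\mathbf{x}} \; \frac{1}{2\sigma^{2}} \left\lVert \mathbf{y} - D B \mathbf{x} \right\rVert^{2} - \log p(\mathbf{x})
$$

The first term measures agreement with the observation model, and the second term penalizes candidates that the prior considers unlikely to be faces.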

Observation: Ayan Chakrabarti et al. [105] proposed a learning-based method for super-resolution of face images that uses kernel PCA to construct a prior model for frontal face images. This model is used to regularize the reconstruction of high-resolution face images from blurred and noisy low-resolution observations. By nonlinearly mapping the face images to a higher-dimensional feature space and performing PCA in that feature space, the method captures higher-order correlations present in face images. The performance of the proposed kernel-based face hallucination still needs to be confirmed by comparison with recognition models that use the low-resolution (LR) face image and the reconstructed high-resolution (HR) image. This method is a global approach in the sense that processing is done on the whole of the LR images simultaneously. This imposes the constraint that all of the training images should be globally similar, which means that they should belong to a similar class of objects. Therefore, the global approach is suitable for images of a particular class, such as facial images and fingerprint images. However, since the global approach needs the assumption that all of the training images are in the same class, it is difficult to apply it to arbitrary images. In a similar context, an application of the Hebbian algorithm has been described in which kernel PCA is used for image zooming by projecting an interpolated version of the low-resolution image onto the high-resolution principal subspace. That method is, however, limited to using a single image and does not include any knowledge of the imaging process.

19. VIII.

20. Face sketches recognition

Face sketching is a forensic technique that has been regularly used in criminal investigations [120], [121]. The success of using face sketches to identify and capture fugitives and criminal suspects has often been publicized in media coverage, mainly for high-profile cases [122], [123]. As a special forensic art, face sketching is usually done manually by police sketch artists. As a result of rapid advancements in computer graphics, realistic animation, human-computer interaction, visualization, and face biometrics, sophisticated facial composite software tools have been developed and used in law enforcement agencies. A recent national survey has pointed out that about 80% of state and local police departments in the U.S. have used facial composite software, and about 43% of them still relied on trained forensic artists [124].

However, there are concerns regarding the accuracy of face sketches, mainly those generated by software. Studies have shown that software kits were inferior to well-trained artists [125], [126]. One of the disadvantages of composite systems is that they follow a "piecemeal" approach by adding facial features in an isolated manner. In contrast, artists tend to use a more "holistic" strategy that emphasizes the overall structure. Considerable effort has been made to incorporate holistic dimensions into composite systems, using rated psychological parameters to develop face models [127], [128]. Recently, a caricaturing procedure has been employed to further improve the performance of facial composite systems [129]. The quality of a sketch (whether by software or an artist) depends on many factors, such as the artist's drawing skill and experience, the exposure time for a face, and the distinctiveness of a face, as well as the memory and emotional state of eyewitnesses or victims [120], [121], [125], [130], [131]. The impacts of these factors on sketch quality and their complex interrelationships have not been well understood on a quantitative basis.

Sketch-recognition research is strongly motivated by its forensic applications. Earlier work includes a study of matching police sketches to mugshot photographs [132]. Sketches were first transformed into pseudo-photographs through a series of normalizations and were then compared with photographs in an eigenspace. Tang and Wang [133] reported a more comprehensive investigation of hand-drawn face sketch recognition. They developed a photograph-to-sketch transformation method that synthesizes sketches from the original photographs. The method improves the similarity between the sketches drawn by artists and the synthesized sketches. They also found that the algorithms performed competitively with humans using those sketches. In [134] and [135], they further proposed a transformation function that treats shape and texture separately and a multiscale Markov random field model for sketch synthesis. Recently, a study on searching sketches in mugshot databases has been reported [136]. Sketch-photograph matching was performed using a set of extracted local facial features and global measurements. Sketches were drawn with composite software, and no transformation was applied to sketches or photographs. Along a slightly different research line of using caricature models for face representation and recognition, Wechsler et al. [137] provided a framework based on the self-organizing feature map and found that caricature maps can increase the differences between subjects and hence enhance the recognition rate.
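Comparison in an eigenspace, as mentioned at the start of this paragraph, can be sketched as follows. This is a minimal illustration assuming flattened grayscale images of equal size, a PCA basis learned from the photographs only, and Euclidean nearest-neighbour matching; the function names are hypothetical, and none of the normalization or transformation steps of the cited studies is reproduced.

```python
import numpy as np

def fit_eigenspace(photos, n_components):
    """Learn a PCA basis from photographs (one flattened image per row)."""
    mean = photos.mean(axis=0)
    _, _, Vt = np.linalg.svd(photos - mean, full_matrices=False)
    return mean, Vt[:n_components]

def project(images, mean, basis):
    """Project flattened images onto the eigenspace."""
    return (images - mean) @ basis.T

def match(sketch, photos, mean, basis):
    """Return the index of the photograph closest to the sketch in the eigenspace."""
    gallery = project(photos, mean, basis)
    probe = project(sketch[None, :], mean, basis)
    return int(np.argmin(np.linalg.norm(gallery - probe, axis=1)))
```

In practice a sketch and a photograph of the same person differ far more than two photographs do, which is why the studies above first transform one modality toward the other before projecting.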

Information fusion is an important method for improving the performance of various biometrics [138], [62], [85], including face, fingerprint, voice, ear, and gait. Bowyer et al. [69] have shown that a multisample approach and a multimodal approach can achieve the same level of performance. Large increases in face-recognition accuracy were also reported in studies of multiple video frame fusion [47], [32], [42]. In research evaluating face composite recognition [96], it was found that the combination of four composite faces through morphing was rated better than or as good as the best individual face. Therefore, it is natural to argue that the fusion of multiple sketches may also increase the chance of finding a correct sketch-photograph match. Multisketch fusion can be carried out using sketches from the same artist or sketches from different artists.
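One simple way to fuse several sketches of the same subject is at the score level with the sum rule. The following is a minimal sketch, assuming each sketch has already been compared against every gallery photograph to give a similarity score (higher means more similar) and that min-max normalization is applied per sketch; the function names and the normalization choice are assumptions for illustration.

```python
import numpy as np

def min_max_normalise(scores):
    """Rescale one sketch's scores to the range [0, 1]."""
    lo, hi = scores.min(), scores.max()
    if hi == lo:
        return np.zeros_like(scores, dtype=float)
    return (scores - lo) / (hi - lo)

def sum_rule_fusion(score_lists):
    """Fuse per-sketch similarity scores of shape (n_sketches, n_gallery) by summing."""
    normed = np.array([min_max_normalise(np.asarray(s, dtype=float)) for s in score_lists])
    fused = normed.sum(axis=0)
    return int(np.argmax(fused)), fused
```

With the scores `[[0.9, 0.8, 0.1], [0.2, 0.9, 0.1], [0.3, 0.8, 0.9]]`, the first and third sketches individually favour gallery entries 0 and 2, but the fused score favours entry 1, which every sketch rates highly. This shows how fusion can cancel errors that are not shared across sketches.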

Yong Zhang et al. [119] were motivated by face composite recognition [96] because of its potential to offer more diverse information about a face. They then carried out a qualitative study of hand-drawn face sketch recognition by humans and a PCA-based algorithm for forensic applications. Another consideration that influenced the work of Yong Zhang et al. [119] is that, if the sketches resulting from different eyewitnesses are assumed to be mostly uncorrelated, multisketch fusion may cancel out certain recognition errors.

With these factors in mind, Yong Zhang et al. [119] studied the effectiveness of hand-drawn sketches by comparing the performance of human volunteers and a principal component analysis (PCA)-based algorithm. To simplify the task, the sketches were obtained under an "ideal" condition: artists drew sketches by looking at the faces in photographs without a time constraint. This type of sketch makes it possible to address some basic issues that are of interest to both criminal investigators and researchers in biometrics and cognitive psychology: 1) Does the face sketch recognition rate vary much from one artist to another? If so, the inter-artist difference may be harnessed through a multisketch fusion method; 2) The ideal sketches can be used to set up a recognition baseline to benchmark the performance of sketches that are drawn under a more forensically realistic condition; and 3) In sketch-photograph matching, does human vision use certain sketch or photometric cues more effectively than a computer algorithm, or vice versa? What kinds of sketch features are more informative to human vision or to the algorithm? How can forensic artists and composite software developers take advantage of the findings?

Volume XII Issue XIII Version I

Observation: From the qualitative study by Yong Zhang et al. [119], we can observe the following:

1) There is a large inter-artist difference in sketch recognition rate, which is likely associated with the drawing styles of artists rather than their skill.
2) Since multisketch fusion can considerably improve the recognition rate, as observed in both PCA tests and human evaluations, using multiple artists in a criminal investigation is recommended.
3) Besides the accuracy of major sketch lines, pictorial details such as shadings and skin textures are also helpful for recognition.
4) Humans showed a better performance with the cartoon-like sketches (considered more difficult), given the particular data set used in this study. However, considering that a PCA algorithm is more sensitive to intensity differences, it is not clear whether human vision is more tolerant of face degradation in general. More research efforts are needed, particularly those that use shape information extracted by an active appearance model.
5) Human and PCA performances seem mildly correlated, based on the correlation analysis results, although experiments involving more artists and sketch samples are required.
6) Score-level fusion with the sum rule seems effective in combining sketches of different styles, at least for the case of a small number of artists.
7) PCA did a better job in recognizing sketches with less distinctive features, while humans used tonal cues more effectively. However, caution should be taken when dealing with sketches that have been processed by advanced transformation functions [133], [134], because those functions may alter the textures and hence the tonality of a sketch considerably.
8) It is worth mentioning that sketch-photograph matching is more challenging than photograph-photograph matching because a sketch is not a simple copy of a face but rather one perceived and reconstructed by an artist. Therefore, there may be much more to gain by examining how humans and computers recognize sketches and caricatures.
9) One important issue is that sketches drawn from the verbal descriptions of eyewitnesses may result in a much lower recognition rate because of the uncertainties related to the memory loss of eyewitnesses. Therefore, more thorough investigations are needed to address the various issues related to sketch recognition under a forensically realistic setting, such as the impact of target delay [45]. In [45], face construction was conducted with a two-day delay, and the manually generated sketches were found to outperform other traditional face construction methods.
10) Another promising research direction is to reconstruct a 3-D sketch model from the original 2-D sketches. Using the 3-D model, a series of 2-D sketches at different view angles can be generated to facilitate identification. This 3-D modeling approach can be helpful in cases where a subject was uncooperative and observed at a distance [41].

IX.

21. Conclusion

One face recognition methodology is the holistic approach, which takes the whole face image as raw data and recognizes the face. In another methodology, referred to as the feature-based approach, facial components such as the mouth, nose, and eyes are extracted and then used to recognize the face. The third methodology, labelled the hybrid approach, combines the holistic and feature-based methods. This paper concentrated on a contemporary review of the recent literature on face recognition techniques. The three necessary processes are face detection, dimensionality reduction, and face recognition. Dimensionality reduction is used to address the curse of dimensionality. It can be divided into two parts: feature extraction and feature selection. The feature extraction process can be broadly classified into four types: linear methods, nonlinear methods, multilinear methods, and tensor space methods. In this paper, we reviewed the various methods included in the linear and nonlinear feature extraction processes.

PCA, LDA, and ICA have been the best-known linear feature extraction methods for more than 10 years, whereas KPCA, ISOMAP, and LLE are the well-known techniques in nonlinear feature extraction. Researchers are now concentrating on combining linear and nonlinear methods for dimensionality reduction and feature extraction. The contribution of this paper is to identify the research scope in face recognition methods and to give details about the models cited in recent literature. Though many techniques are available, issues such as high dimensionality, resolution changes, and divergent expressions remain, which indicates scope for future research in face recognition systems. Future work will concentrate on these issues.

![[63][19][19][49][20]. MPCA and KPCA are fully based on the PCA technique.](https://computerresearch.org/index.php/computer/article/download/307/version/100538/6-Face-Recognition-Methodologies-Using_html/8289/image-5.png)

![[15][93][66][74][73][75][46][70].](https://computerresearch.org/index.php/computer/article/download/307/version/100538/6-Face-Recognition-Methodologies-Using_html/8290/image-6.png)

![Fig. 1: Illustration of the ICA process for feature extraction and classification](https://computerresearch.org/index.php/computer/article/download/307/version/100538/6-Face-Recognition-Methodologies-Using_html/8292/image-8.png)