1. INTRODUCTION

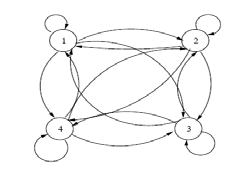

Automatic recognition of handwritten characters has been a subject of research for many years. It has several applications, for example in multimedia and image compression. Automatic recognition of a Tifinagh character is carried out in three steps: preprocessing to reduce noise, feature extraction, and classification (neural networks, hidden Markov model, hybrid MLP/HMM model). Neural networks are a computing system widely used for image recognition [1,2,3,4]; they are trained here with the gradient descent algorithm. For the hidden Markov model, we use the extraction vector as a sequence of observations and seek the model with the best probability; the Baum-Welch algorithm is used for learning. For the hybrid MLP/HMM model, we take the output of the neural network as the emission probabilities of the hidden Markov model. We propose a recognition system implemented on a database of handwritten Tifinagh characters.

This paper is organized as follows. Section 2 is devoted to the test database. In section 3, we describe the feature extraction method based on mathematical morphology. In section 4, we present an overview of MLP neural networks. Experimental results for neural networks are presented in section 5. The hidden Markov model (HMM) is presented in section 6, and its experimental results in section 7. In section 8, we present the hybrid MLP/HMM model. The recognition system is illustrated in Figure 1.

2. TIFINAGH DATABASE

The database used is Tifinagh. It is composed of 7200 Tifinagh characters (handwritten and printed), of which 1800 are used for learning and 5400 for testing. The IRCAM Tifinagh alphabet has 33 characters. Example of IRCAM Tifinagh characters:

3. PREPROCESSING

In the preprocessing phase, the images of handwritten characters are digitized with a scanner and then binarized by thresholding. Each character is then normalized into a square of standard size 150 x 150. For each character we determine the discriminating parameters (zones). The characteristic zones can be detected by the intersections of dilations toward the East, West, North and South. We define for each image five types of characteristic zones: East, West, North, South, and Central. A point of the image (Figure 5) belongs to the East characteristic zone (Figure 6) if and only if:

- This point does not belong to the object (the white pixels in the image).
- From this point, moving in a straight line to the East, we do not cross the object.
- From this point, moving in a straight line to the South, North and West, we cross the object.

A point of the image (Figure 11) belongs to the North characteristic zone (Figure 12) if and only if:

- This point does not belong to the object (the white pixels in the image).

A point of the image (Figure 13) belongs to the Central characteristic zone if and only if:

- This point does not belong to the limit of the object.
- From this point, moving in a straight line to the South, North, East and West, we cross the object.

The result of the extraction is illustrated in Figure 13.
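The zone conditions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the binary image is a list of rows in which 1 marks an object pixel and 0 a background pixel, a ray "crosses the object" if it meets at least one object pixel, and the function name `characteristic_zones` is hypothetical. The paper itself detects the zones with morphological dilations; the direct ray test here is a simpler stand-in for the same definitions.

```python
def characteristic_zones(img):
    """Count background pixels falling in each characteristic zone.

    img: list of rows, 1 = object pixel, 0 = background (assumed encoding).
    Returns a dict with keys W, E, N, S, C.
    """
    h, w = len(img), len(img[0])
    zones = {"W": 0, "E": 0, "N": 0, "S": 0, "C": 0}
    for r in range(h):
        for c in range(w):
            if img[r][c]:
                continue  # object pixels belong to no zone
            # For each direction, does a straight ray meet the object?
            crossed = {
                "E": any(img[r][k] for k in range(c + 1, w)),
                "W": any(img[r][k] for k in range(c)),
                "N": any(img[k][c] for k in range(r)),
                "S": any(img[k][c] for k in range(r + 1, h)),
            }
            open_dirs = [d for d, hit in crossed.items() if not hit]
            if not open_dirs:
                zones["C"] += 1             # enclosed on all four sides
            elif len(open_dirs) == 1:
                zones[open_dirs[0]] += 1    # open in exactly one direction
    return zones


# A small "C"-like shape that is open toward the East.
c_shape = [
    [1, 1, 1],
    [1, 0, 0],
    [1, 1, 1],
]
print(characteristic_zones(c_shape))
```

Background pixels that are open in two or more directions are assigned to no zone, which follows from the "if and only if" conditions.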

Figure 13 :

4. NEURAL NETWORKS

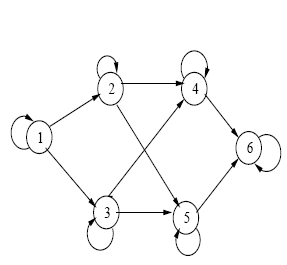

Neural networks [8,9,10,11,12] draw on properties of the brain to build computing systems better able to solve the kinds of problems that human beings know how to solve. There are several models; one of them is the perceptron.

a) Multi-layer perceptron (MLP) [13,14,15]

The classification phase is as follows:

The number of neurons in the network is:

- Five neurons in the input layer (the number five corresponds to the values found in the extraction vector).
- Eighteen neurons in the output layer (the number eighteen corresponds to the number of characters used).
- The number of neurons in the hidden layer is selected according to these three conditions:
  - Equal to the number of neurons in the input layer.
  - Equal to 75% of the number of neurons in the input layer.
  - Equal to the square root of the product of the numbers of neurons in the input and output layers.

Following these three conditions, we varied the number of neurons in the hidden layer between five and ten. The learning method is gradient descent (gradient back-propagation algorithm) [16,17,18,19,20].
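As a quick worked example, the three sizing rules can be evaluated for the layer sizes given above (five inputs, eighteen outputs). The function and key names below are hypothetical and serve only to illustrate the arithmetic.

```python
import math


def hidden_layer_candidates(n_in, n_out):
    """Evaluate the three hidden-layer sizing rules for given layer sizes."""
    return {
        "same_as_input": n_in,
        "three_quarters_of_input": 0.75 * n_in,
        "sqrt_of_input_times_output": math.sqrt(n_in * n_out),
    }


# Layer sizes from the text: 5 input neurons, 18 output neurons.
for rule, value in hidden_layer_candidates(5, 18).items():
    print(f"{rule}: {value:.2f}")
```

For these sizes the rules give 5, 3.75 and roughly 9.49 neurons respectively.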


6. CLASSIFICATION

After extracting the characteristic data of the input image, we classify these data with a neural network (multilayer perceptron). We must compute the weight coefficients and the desired outputs.

7. a) Experimental Results

The characteristic vectors obtained in the extraction phase are presented at the input of the neural network. The desired output is known, and the network is forced to converge to a specific final state (supervised learning). Each character is characterized by a vector of five components. To train the network (multi-layer perceptron, MLP), we started with one set of eighteen images and finished with 50 sets totalling 900 images, in order to find the parameters that give the best network performance (Figure ).
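The supervised training described above can be sketched as a small multi-layer perceptron trained by gradient back-propagation. This is a minimal illustration, not the authors' implementation: the sigmoid activation, squared-error loss, learning rate, weight initialization, one-hot targets and the class name `MLP` are all assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class MLP:
    """One-hidden-layer perceptron; each weight row ends with a bias term."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                   for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                   for _ in range(n_out)]

    def forward(self, x):
        xb = list(x) + [1.0]
        h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = h + [1.0]
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return h, y

    def train_step(self, x, target, lr=0.5):
        """One gradient-descent update; returns the loss before the update."""
        h, y = self.forward(x)
        # Output deltas for squared error with sigmoid units.
        d_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas, back-propagated through w2 before it is changed.
        d_hid = [hj * (1 - hj) * sum(d_out[k] * self.w2[k][j]
                                     for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        for k, row in enumerate(self.w2):
            for j, v in enumerate(h + [1.0]):
                row[j] -= lr * d_out[k] * v
        for j, row in enumerate(self.w1):
            for i, v in enumerate(list(x) + [1.0]):
                row[i] -= lr * d_hid[j] * v
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def predict(self, x):
        """Index of the output neuron with the largest activation."""
        _, y = self.forward(x)
        return max(range(len(y)), key=lambda k: y[k])


# Shape used in the text: 5 inputs, 18 outputs, hidden size between 5 and 10.
net = MLP(n_in=5, n_hidden=7, n_out=18)
```

Each training pair would be a five-component extraction vector and a one-hot target of length eighteen, one position per character class.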

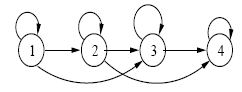

$$P(X_t = q_{i_t} \mid X_{t-1} = q_{i_{t-1}}, \ldots, X_1 = q_{i_1}) = P(X_t = q_{i_t} \mid X_{t-1} = q_{i_{t-1}}, \ldots, X_{t-n} = q_{i_{t-n}})$$

b) Stationary chain

A Markov chain of order 1 is stationary if, for any $t$ and $k$:

$$P(X_t = q_i \mid X_{t-1} = q_j) = P(X_{t+k} = q_i \mid X_{t+k-1} = q_j)$$

In this case, we define a transition probability matrix $A = (a_{ij})$ such that:

$$a_{ij} = P(X_t = q_j \mid X_{t-1} = q_i)$$

At a time given a any process.

- Emission of the end of the observation $O(t+1:T)$.

The evaluation of the observation is given by:

f) Recognition:

Knowing the class to which the character belongs, the character is compared with the models $\lambda_k$, $k = 1, \ldots, L$, of its class. The selected model is the one that gives the best probability for the evaluation of its sequence of primitives, i.e. $\max_k P(O \mid \lambda_k)$.

Here $O$ is the sequence of observations; in this work it is the extraction vector.

$\lambda_k$ is the Markov model consisting of the transition matrix $A$, the observation matrix $B$ and the initial matrix $\pi$.

$Q = \{q_1, \ldots, q_n\}$ represents the set of states. For classification with the hidden Markov model, we treat the values of the characteristic vectors obtained for the characters as a sequence of observations. We initialize the model and then use the Baum-Welch algorithm (learning) to find the model parameters that give the best probability ($A$: the transition matrix, $B$: the observation matrix, $\pi$: the initial matrix). Each character is characterized by an extraction vector of five components. The experimental results are illustrated in Figure 19.
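The recognition rule $\max_k P(O \mid \lambda_k)$ can be illustrated with the standard forward algorithm. The sketch below assumes that the observations are discrete symbol indices (for example, quantized components of the extraction vector), which the text does not specify; the function names and the toy models are hypothetical.

```python
def forward_probability(obs, A, B, pi):
    """P(O | lambda) for lambda = (A, B, pi), computed by the forward recursion.

    obs: sequence of observation symbol indices (assumed discrete).
    A[i][j]: transition probability from state i to state j.
    B[j][o]: probability of emitting symbol o in state j.
    pi[i]:  initial probability of state i.
    """
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o]
                 for j in range(n)]
    return sum(alpha)


def recognize(obs, models):
    """Return the name of the model with the highest P(O | lambda_k)."""
    return max(models, key=lambda name: forward_probability(obs, *models[name]))


# Two toy two-state models that differ only in their initial distribution.
A = [[0.5, 0.5], [0.0, 1.0]]
B = [[1.0, 0.0], [0.0, 1.0]]
models = {
    "model_a": (A, B, [1.0, 0.0]),
    "model_b": (A, B, [0.0, 1.0]),
}
print(recognize([1, 1], models))
```

For long observation sequences the recursion is usually run with scaling or in log space to avoid underflow; that refinement is omitted here.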

[Figure labels: Normalisation; Extraction of Vector Image; Numbers of observation; HMMs Recognition]

11. HYBRID MODEL MLP/HMM

Under certain conditions, neural networks can be regarded as statistical classifiers whose outputs are a posteriori probabilities. It is therefore interesting to combine the respective capacities of the HMM and the MLP in new, efficient designs inspired by the two formalisms.

a) The hybrid system

A perceptron can provide the probabilities $P(c_i \mid x(t))$ that a pattern vector $x(t)$ belongs to a class $c_i$. Several systems have been developed on this principle [22]. These systems have many advantages over purely Markovian approaches. However, they are not simple to implement because of the number of parameters to adjust and the large amount of training data needed to train the global model. In this section, we show how our hybrid system is designed. The architecture consists of a multilayer perceptron upstream of a Bakis (left-right) HMM. Figure 20 shows the overall design of the hybrid system, which provides the probabilities of belonging to the different classes. The system is composed of two modules: a neural module and a hidden Markov module.
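One way to picture the coupling of the two modules is the sketch below, in which the class posteriors produced by the neural module are used directly as emission scores in the forward recursion of a left-right HMM. It assumes one HMM state per class and that the posteriors are used without division by class priors; the function names and the toy numbers are hypothetical.

```python
def is_left_right(A):
    """True if A allows no backward transitions (Bakis topology)."""
    return all(A[i][j] == 0 for i in range(len(A)) for j in range(i))


def hybrid_forward_score(posteriors, A, pi):
    """Forward score with MLP posteriors standing in for emission probabilities.

    posteriors[t][j]: MLP output P(c_j | x(t)) for frame t and state/class j.
    A[i][j]: transition probability from state i to state j.
    pi[i]:  initial probability of state i.
    """
    n = len(pi)
    alpha = [pi[j] * posteriors[0][j] for j in range(n)]
    for frame in posteriors[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * frame[j]
                 for j in range(n)]
    return sum(alpha)


# Toy two-state left-right model and two frames of MLP posteriors.
A = [[0.5, 0.5], [0.0, 1.0]]
pi = [1.0, 0.0]
posteriors = [[0.9, 0.1], [0.2, 0.8]]
assert is_left_right(A)
print(hybrid_forward_score(posteriors, A, pi))
```

Many hybrid systems divide the posteriors by the class priors to obtain scaled likelihoods before using them as emissions; the sketch keeps the raw posteriors for simplicity.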

Since the observations are the various classes, we associate an observation with each state. Our goal is again to find the best way of maximizing the probability of a sequence of observations; the recognition method used with the hybrid model is the one used for the hidden Markov model (section 7.6). The HMM takes as input two matrices and a vector: a matrix of transition probabilities, a matrix of emission probabilities, which is the output of the MLP (posterior probabilities), and a vector of the initial state probabilities.

b) Results of the hybrid system

12. CONCLUSION

In this work, we use methods based on neural networks (multi-layer perceptron with back-propagation), the hidden Markov model and the hybrid MLP/HMM model for the classification of handwritten Tifinagh characters. A feature extraction technique based on mathematical morphology is applied before classification. We show that the HMM and MLP approaches can be reliable methods for this classification. We introduced the hybrid system to make the classification process more intelligent by using the output of the neural network as emission probabilities when initializing the parameters of the hidden Markov model. As future work, we can enlarge the database and the set of characters used in recognition, so as to generalize this extraction method to the recognition of all Tifinagh characters (33 characters according to IRCAM).

![Figure 14: The central characteristic zone (CZ)](https://computerresearch.org/index.php/computer/article/download/757/version/100287/3_html/3152/image-14.png)

Each character is characterized by five components: $N_{WZ}$, $N_{EZ}$, $N_{NZ}$, $N_{SZ}$ and $N_{CZ}$, which are the numbers of pixels of value 1 in the West, East, North, South and Central characteristic zones respectively. The extraction vector is defined as follows:

$$V_{ext} = [WZ, EZ, NZ, SZ, CZ]$$

where $N_{pixels}$ is the number of pixels in the image of size 150 x 150, and

$$WZ = \frac{N_{WZ}}{N_{pixels}}, \quad EZ = \frac{N_{EZ}}{N_{pixels}}, \quad NZ = \frac{N_{NZ}}{N_{pixels}}, \quad SZ = \frac{N_{SZ}}{N_{pixels}}, \quad CZ = \frac{N_{CZ}}{N_{pixels}}$$
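The normalization of the five zone counts can be illustrated as follows. The function name, the dictionary keys and the example counts are hypothetical; only the division by the number of pixels of the 150 x 150 image comes from the definition above.

```python
def extraction_vector(counts, size=150):
    """Build [WZ, EZ, NZ, SZ, CZ] from the pixel counts of the five zones.

    counts: dict with keys W, E, N, S, C holding the number of pixels
            in each characteristic zone (hypothetical layout).
    size:   side length of the normalized square image.
    """
    n_pixels = size * size  # 22500 for a 150 x 150 image
    return [counts[zone] / n_pixels for zone in ("W", "E", "N", "S", "C")]


# Made-up counts, for illustration only.
example_counts = {"W": 2250, "E": 0, "N": 4500, "S": 0, "C": 225}
print(extraction_vector(example_counts))
```

Because every component is a fraction of the total pixel count, the vector is independent of the absolute image size once the character has been normalized.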

| Handwritten character sets | Numbers of characters | Manuscripts test database (%) | Printed test database (%) |
|---|---|---|---|
| 1 set | 18 | 62.43 | 60.20 |
| 10 sets | 180 | 78.88 | 60.36 |
| 20 sets | 360 | 81.57 | 61.86 |
| 30 sets | 540 | 81.75 | 62.46 |
| 40 sets | 720 | 84.23 | 63.83 |
| 50 sets | 900 | 84.91 | 63.88 |
| Numbers of images for test | | 2340 Images | 3060 Images |