1. I. Introduction

In the IT industry, which operates in a dynamic and constantly changing external environment, the demands placed on specialists' competencies have increased. Process innovation has become crucial for coping with intensifying competition, and the training paradigm for IT specialists must be adapted to meet these evolving requirements [8].

To streamline and expedite the startup evaluation procedure, the development of software to compute assessments through a defined methodology is imperative. This software should encompass several pivotal processes.

Firstly, the establishment and management of database records are paramount. The software should furnish administrators with the capability to input essential information such as startup author names, startup titles, and the like. Moreover, the system should facilitate the addition of new users and offer other related functionalities [1]. Furthermore, the system should accommodate experts responsible for evaluating startups, enabling them to input assessments based on diverse criteria into the database. This functionality should also extend to the modification or potential deletion of entered data when necessary.

Secondly, the system should incorporate a mechanism for estimating project viability using pertinent formulas derived from the developed methodology. Data requisite for these calculations, notably evaluations corresponding to the established criteria, should be extracted from the database.

Upon calculation, the system should display the outcome in a dedicated interface and record the result within the database. In scenarios where multiple experts assess a single startup, the system must calculate the arithmetic mean of the evaluations and subsequently incorporate this average into the database.
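The averaging step described above can be sketched as follows. This is a minimal illustration in Python, not the authors' C# implementation; the function name `average_expert_scores` and the sample scores are hypothetical.

```python
from statistics import fmean


def average_expert_scores(scores):
    """Return the arithmetic mean of the final scores given by several experts."""
    if not scores:
        raise ValueError("at least one expert score is required")
    return fmean(scores)


# Three hypothetical experts rated the same startup.
combined = average_expert_scores([42.0, 48.0, 45.0])
print(combined)  # 45.0
```

In the described system, the resulting average would then be written back to the database as the startup's rating.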

2. II. Literature Review

The concept of a startup is defined as a nascent company, possibly not yet officially registered but with serious intentions to achieve official status. These companies build their foundations on innovation or innovative technologies, with a predominant focus on IT projects. In simpler terms, a startup is a transformative process that converts an idea into a flourishing business.

The significance of developing an automated system for assessing startups within the realm of Software Engineering stems from the challenge of evaluating various startup initiatives in the Software Engineering domain. A comprehensive analysis revealed the absence of a unified methodology for appraising Software Engineering startups. Subsequent to scrutinizing existing methods, the primary criteria and parameters for evaluating Software Engineering startup projects were identified [2].

This methodology amalgamates key evaluation indicators specific to Software Engineering startups and incorporates the consideration of criteria weights. The aim of this study is to analyze existing methods for evaluating startups in the realm of Software Engineering and to develop an automated system that assesses the quality of startups specifically within Software Engineering projects. The focus will be on utilizing software product quality assessment techniques to enhance the evaluation process.

We consider the following assumptions:

- "x" represents a criterion.
- "a" signifies the evaluation of the criterion on a five-point scale.
- "b" denotes the weight of the criterion, falling within the range [0.1; 1].
- "i" represents the criterion number, ranging over [1; 12].

For each criterion with an assigned weight, experts, including admins, entrepreneurs, and specialists from various domains, provide corresponding scores on a five-point scale. The formula for project evaluation is as follows:

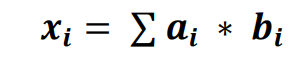

(1)

The resultant evaluation of a startup's Software Engineering projects and developments, according to this method, spans from a minimum of 12 to a maximum of 60. The criteria weights enable the distribution of significance across these evaluations concerning projects, thereby emphasizing the most promising ones.
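Because formula (1) is available here only as an image, the following Python sketch assumes that the project evaluation is the weighted sum of the criterion scores, that is, the sum of a_i multiplied by b_i over the 12 criteria, with the five-point scale running from 1 to 5. Under that assumption, the stated bounds of 12 and 60 correspond to every weight being equal to 1. The function and constant names are hypothetical.

```python
CRITERIA_COUNT = 12   # criterion number i ranges over [1; 12]
MIN_SCORE, MAX_SCORE = 1, 5        # assumed five-point scale for "a"
MIN_WEIGHT, MAX_WEIGHT = 0.1, 1.0  # weight "b" lies in [0.1; 1]


def startup_score(scores, weights):
    """Assumed reading of formula (1): the sum of a_i * b_i over all criteria."""
    if len(scores) != CRITERIA_COUNT or len(weights) != CRITERIA_COUNT:
        raise ValueError(f"exactly {CRITERIA_COUNT} scores and weights are required")
    for a in scores:
        if not MIN_SCORE <= a <= MAX_SCORE:
            raise ValueError(f"score {a} is outside the five-point scale")
    for b in weights:
        if not MIN_WEIGHT <= b <= MAX_WEIGHT:
            raise ValueError(f"weight {b} is outside [0.1; 1]")
    return sum(a * b for a, b in zip(scores, weights))


# With all weights equal to 1, the result stays within [12; 60].
print(startup_score([1] * 12, [1.0] * 12))  # 12.0
print(startup_score([5] * 12, [1.0] * 12))  # 60.0
```

Lowering the weight of a criterion reduces its contribution to the total, which is how the weights shift significance between criteria.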

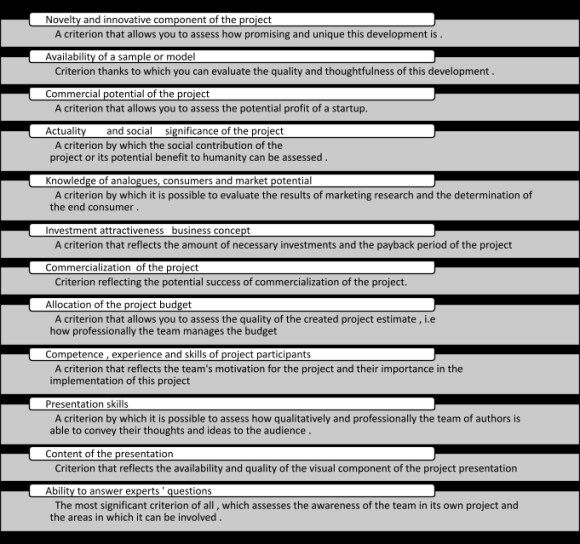

(2)

Each of the aforementioned criteria is examined in greater detail in Fig. 2.

Fig. 2: Criterion Values

The wide range of criteria allows the user's project to be assessed objectively from several perspectives and gives a comprehensive, detailed picture of the evaluated startup.

4. IV. Presentation of the Main Material

In the context of startups in the field of Software Engineering, the selection of appropriate and dependable software development tools often proves pivotal in achieving a successful end product.

For the purpose of program development, the integrated environment of Visual Studio 2017, provided by Microsoft, was chosen. The program itself was authored in the modern, object-oriented programming language, C#. Windows Forms technology was employed to craft the graphical user interface.

The database operations were facilitated through various technologies. The widely utilized SQL programming language was employed for database creation and manipulation. InnoDB was selected as the storage mechanism due to its renowned reliability and high performance. To enhance database management and data processing, MySQL Workbench, a graphical tool, was utilized. The connection between the program and the database was established using the freely available and user-friendly MySQL Connector driver.

This software solution offers the functionality of user authorization and registration. Upon successful authorization, users are directed to their respective personalized accounts, where their available actions are contingent upon their assigned role.

A user with the "User" role can only view data on the grades that startups have received. A user with the "Admin" role can both view startup assessments and enter new evaluation data. A user with the "Administrator" role has full control: they can add, edit, or delete data in the system and access any existing data. The administrator also grants admins access to add and modify assessments.
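The role model described above can be sketched as a simple permission table. This is an illustrative Python sketch rather than the authors' C# code; the three role names come from the text, while the action names (`view_ratings`, `add_rating`, and so on) and the `can` helper are hypothetical.

```python
from enum import Enum


class Role(Enum):
    USER = "User"
    ADMIN = "Admin"
    ADMINISTRATOR = "Administrator"


# Hypothetical action names; the mapping follows the role descriptions in the text.
PERMISSIONS = {
    Role.USER: {"view_ratings"},
    Role.ADMIN: {"view_ratings", "add_rating"},
    Role.ADMINISTRATOR: {
        "view_ratings",
        "add_rating",
        "edit_data",
        "delete_data",
        "grant_admin_access",
    },
}


def can(role, action):
    """Return True if the given role is allowed to perform the action."""
    return action in PERMISSIONS[role]


print(can(Role.USER, "add_rating"))            # False
print(can(Role.ADMINISTRATOR, "delete_data"))  # True
```

Keeping the permissions in one table makes it straightforward to decide which buttons a personalized account should expose after authorization.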

Conceptual Database Model for Software Engineering Startups

The "User" table stores data on users registered in the system. Its structure is given in Table 1.

In future iterations, additional fields such as "Author's Name," "Startup Name," and "Expert's Name" could be added to extend the data model. At present, the focus is on a streamlined dataset for simplicity.

The "Startup" table is related to the "Admin" and "User" tables: it is linked to the "Admin" table through the "admin_id" field and to the "User" table through the "user_id" field. This relational structure supports the representation and management of startup assessment data within the Software Engineering context.

1. The "User" and "Admin" tables: These tables do not themselves reference each other or any other table. Their sole purpose is to store essential data on the registered users and administrators of the system.
2. The "Startup" table: This table stores data on startups that have been assessed by admins. Its structure is given in Table 3.

The next stage is to describe the product, service, or technology that will solve the customer's problem and to state what the customer will be charged for. An illustration of the solution description is provided below. The quality of this aspect is assessed with the "Novelty and Innovative Component of the Project" criterion.

Within the software application, users enter data through designated text fields. The final score is determined by the formula given in the methodology above. The program's output is presented as a text message, while data stored in the database is shown in tabular form.

After successful authorization with a unique code provided by the administrator, the admin or an invited expert responsible for startup evaluation gains access to a window for calculating the score by the method described above (Fig. 3). This interface is used to carry out the evaluations.

Fig. 3: The Main Window for Calculating the Score

The user interface presents a side menu with the buttons "Add Points," "Edit," "View Data," and "Enter Final Score to Database." The main panel contains the data input fields and a prominent button labeled "Calculate the Final Grade."

To review the program's output, click the "View Data" button. This displays all previously entered or edited data in tabular form (Fig. 4). Future versions of the program could add sorting and filtering of the displayed data to improve the user experience and support data analysis.

Fig. 4: Data View Window

To see all the values entered in the table, use the scroll bar at the bottom of the screen. To compute the final assessment, the user must enter all relevant data (Fig. 6.2). During the calculation, the system performs the same data entry correctness checks as during editing. Once the data has been entered successfully, clicking the "Calculate the Final Estimate" button starts the computation, and the outcome is displayed on the screen as a message. The calculation follows the formula from the methodology described above, under which the highest attainable score for a user's startup is 60 points and the lowest is 12 points.

The final assessment is also recorded in the database. To review it in the table, the user clicks the "View Data" button again, which opens the data viewing window (Fig. 5). The calculated result appears in the "Final Assessment" column.

Clicking the "View Rating" button opens a dedicated data viewing window in which the final rating is shown next to the startup's name. The final grade is calculated as follows: an arithmetic mean is computed from the evaluations provided by multiple experts, and the resulting average is shown in the table (Fig. 6). This methodology aligns with our focus on Software Engineering startup assessment.

© 2022 Global Journals

7. V. Conclusion

In the process of software development, an in-depth investigation and analysis were undertaken to address challenges encountered in evaluating startups within the realm of Software Engineering.

A meticulous examination of methods for assessing Software Engineering startup projects was conducted. The following key achievements were attained:

1. The creation of an automated system for evaluating startups in the Software Engineering domain.
2. The development of a comprehensive database to house information pertaining to registered users and assessments issued by experts.
3. The implementation of a user interface featuring the following functionalities:
   1. Authorization and user registration.
   2. Data editing capabilities for the system administrator.
   3. Assessment calculation according to the prescribed methodology and subsequent presentation of the final outcome.
   4. Viewing the final grade for student projects.

Furthermore, this bachelor's work was successfully presented at the conference "Modern Problems of Scientific Energy Supply." The report's topic centered around the "Automated System for Evaluating Startups in the Field of Software Engineering."

The proposed comprehensive evaluation method for startup projects within the department holds the potential to guide participants in refining their projects and focusing on critical aspects. Moreover, it facilitates project assessment by customers, aids in entrepreneurial competitions, and supports potential investors. The software was developed as a desktop application, offering utility for teachers in conducting various forms of startup competitions and projects within the realm of Software Engineering. This system's utility extends to a diverse range of scenarios, contributing to the advancement of Software Engineering startup initiatives.

![Fig.1: Evaluation criteria[4]](https://computerresearch.org/index.php/computer/article/download/A-Model-of-an-Automated-System-for-Assessing-the-Quality-of-Startups-in-the-Field-of-Software-Engineering/version/102318/4-A-Model-of-an-Automated-System_html/41441/image-2.png)

| Name | Field type | Description |
| --- | --- | --- |
| id | int | Unique user ID |
| login | varchar | Unique user login |
| password | varchar | User password |
| name | varchar | User's first name |
| surname | varchar | User's surname |


| Name | Field type | Description |
| --- | --- | --- |
| id | int | The unique identifier of the system administrator |
| name | varchar | Administrator login |
| password | varchar | Administrator password |


| Name | Field type | Description |
| --- | --- | --- |
| id_startup | int | The unique identifier of the startup |
| startup_name | varchar | The name of the startup |
| user_id | int | Unique user ID |
| name_user | varchar | Name of the user. Updated from the User table |
| admin_id | int | Unique identifier of the admin |
| name_admin | varchar | Admin's name. Updated from the Admin table |
| rating | double | Final assessment |
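The links from the "Startup" table to the "Admin" and "User" tables can be sketched as foreign keys. The example below is an assumption-laden illustration: it uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for the MySQL/InnoDB setup described in the paper, takes column names from the tables in this document, omits the denormalized `name_user` and `name_admin` columns for brevity, and uses a hypothetical helper `open_demo_db` with made-up sample rows.

```python
import sqlite3

# SQLite types stand in for the int / varchar / double columns listed in the tables.
SCHEMA = """
CREATE TABLE admin (
    id_admin    INTEGER PRIMARY KEY,
    admin_name  TEXT,
    admin_email TEXT,
    unique_pass TEXT
);
CREATE TABLE user (
    id_user    INTEGER PRIMARY KEY,
    user_name  TEXT,
    user_email TEXT
);
CREATE TABLE startup (
    id_startup   INTEGER PRIMARY KEY,
    startup_name TEXT,
    user_id      INTEGER REFERENCES user(id_user),
    admin_id     INTEGER REFERENCES admin(id_admin),
    rating       REAL
);
"""


def open_demo_db():
    """Create an in-memory database with the sketched schema and sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO admin VALUES (1, 'expert', 'expert@example.com', 'code')")
    conn.execute("INSERT INTO user VALUES (1, 'author', 'author@example.com')")
    conn.execute("INSERT INTO startup VALUES (1, 'Demo', 1, 1, 45.0)")
    return conn


conn = open_demo_db()
print(conn.execute("SELECT startup_name, rating FROM startup").fetchall())
```

With the foreign keys enabled, an attempt to store a startup that points to a nonexistent user or admin is rejected by the database rather than by application code.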

The "Rating" table is designed to house data concerning the ratings provided by admins for each criterion. The table's structure is outlined below (Table 4):


| Name | Field type | Description |
| --- | --- | --- |
| id_rating | int | The unique identifier of the assessment for the startup |
| startup_id | int | The unique identifier of the startup |
| name_startup | varchar | The name of the startup. Updated from the Startup table. |
| Novelty | double | Assessments by criteria |
| sample | double | |
| potential | double | |
| knowledge_analogs | double | |
| relevance | double | |
| answers | double | |
| investments | double | |
| commercial | double | |
| budget | double | |
| experience_skills | double | |
| presentation | double | |
| meaning | double | |
| result | double | The end result |

The "Admin" table comprehensively stores data pertaining to administrators. The table's structure is detailed in the following format (

| Name | Field type | Description |
| --- | --- | --- |
| id_admin | int | Unique identifier of the admin |
| admin_name | varchar | The admin's name |
| admin_email | varchar | E-mail address of the admin |
| unique_pass | varchar | Admin's unique password |

| Name | Field type | Description |
| --- | --- | --- |
| id_user | int | Unique user ID |
| user_name | varchar | Name of the user |
| user_email | varchar | E-mail address of the user |